Clustered Samba

Introduction

NOTE! A new cluster system for Samba called 'CTDB' has now been implemented. See http://ctdb.samba.org/ for details

Current Samba3 implementations can't be used directly on clustered systems because they are oriented to single-server systems. The main reason is that smbd processes use TDB (a local-oriented database) for messaging, storing shared data, etc., so there is no way to coordinate smbd processes running on different cluster nodes.

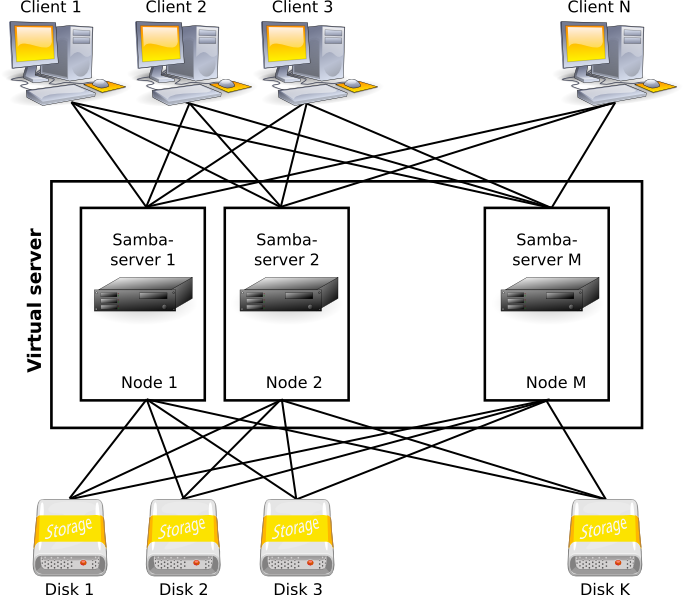

A simple Clustered Samba system looks like this:

(you can get all images' SVG sources here - [1])

Each node has its own smbd daemon. These daemons must communicate with each other to avoid corrupting shared data, to handle oplocks, and so on. The main problem is therefore extending the locking subsystem (share mode locks, opportunistic locks, byte-range locks) to a multi-node system and a cluster point of view.

Cluster Implementation Requirements

This section lists a number of functional requirements that must be addressed by any Clustered Samba implementation.

Data integrity

Multiple Samba servers running on different cluster members and serving the same cluster filesystem should cooperate to ensure that users' data is no less safe than in a standalone configuration.

Zero configuration

The extra administration required to set up a Clustered Samba should be minimised, to nothing if possible. This means that the administrator should not have to configure any cluster membership in addition to that already configured in the underlying cluster. The administrator should not have to configure any setting that can be derived from the underlying cluster (eg. private interfaces).

Single set of binaries

Clustering should be a runtime option. That is, vendors should not have to ship separate sets of binaries for cluster and standalone installations.

Cluster independence

The implementation should work on a variety of clustering technologies. As a guideline, the clustering implementation should have acceptable results for a set of nodes sharing a cross-mounted NFS filesystem.

This also means that the implementation must not rely on a cluster implementing any special CIFS features like share modes, file change notifications, oplocks, etc. The implementation should be able to take advantage of special cluster features if they exist, however.

Across the Samba community, it will be necessary to support CXFS, GFS and GPFS.

Cluster membership

The implementation should tolerate arbitrary fluctuations in cluster membership. Specifically, this means that an implementation that relies on a single special node (eg. to arbitrate share mode access) must be able to tolerate the (possibly permanent) absence of that node.

Fault tolerance

The implementation should tolerate different degrees of reliability amongst cluster members, eg. node A might be really flaky but node B is ok. This tends to imply a loosely coupled model.

Scalability

It is acknowledged that certain workloads will not scale due to the underlying cluster technology. However, the Samba cluster should be designed such that all workloads might scale, since different clusters have different scalability characteristics and the scalability of even the same cluster technology might change across versions.

Workloads that must scale are:

- Multiple clients doing IO to disjoint subtrees

- Multiple clients all reading the same files (ie. renderwall load)

Performance

A key performance criterion for the implementation is latency. The implementation should be designed to minimise the latency of any checks that might require cross-cluster communication.

When applied to a single node, the implementation should not provide substantially worse performance than a non-cluster version of Samba.

The Cluster Synchronization Problem

The fundamental problem in implementing a clustered Samba is distributing the Samba state across the cluster.

Only a portion of the state Samba associates with each file is pushed down into the filesystem. We can assume that the state that is pushed into the filesystem is distributed by the cluster itself. This leaves us with the problem of distributing information that is stored outside the filesystem.

From the point of view of providing strong data integrity, the primary information we need to be concerned about is the locking state. There is an implicit requirement to ensure that any information we manually distribute across the cluster is node-independent.

Distributing Locking State

Here we'll gather information on how locking mechanisms are done in Samba3.

Locking is used in several file operations (opening, closing, deleting), but most of it happens in the large open_file_ntcreate function, which provides the high-level interface to share access testing.

Share mode information consists of two different parts: share mode info and deferred opens info. The former is a collection of file sharing parameters (file name, list of processes that have opened the file, oplock info, etc). The latter is part of the mechanism for deferring open calls.

All locking operations are shown in the diagram Image:Samba_locking_calls.png (based on code analysis of the get_share_mode_lock function).

Original smbd's locking operations include:

- local file operations (file renaming and deleting, posix locking, etc);

- modifications of locking storage (locking.tdb);

- sending messages to other lock holders

All these actions are performed under a tdb chain lock to avoid file system or locking.tdb conflicts.

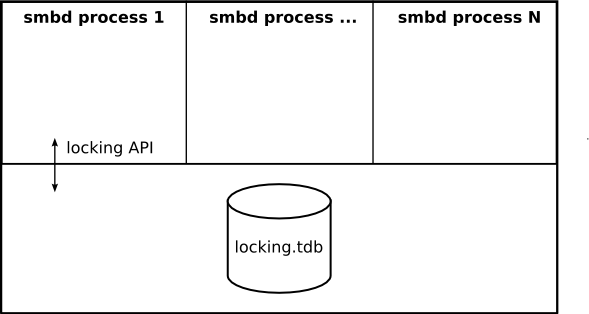

Present locking mechanism uses single locking.tdb (database with shared information - share mode entries, deferred open entries, flags, etc) for all smbd processes:

Node-Independent State Information

The information that Clustered Samba needs to distribute across the cluster varies widely. Effectively, this means that the contents of every message type need to be carefully designed to ensure that it will be valid in a cluster context.

For example,

- an (ino_t, dev_t) tuple is not valid across a CXFS cluster because the dev_t will be different on different cluster nodes.

- in a Tru64 cluster, the pid_t given as an argument to kill(2) is valid across the cluster, but the same is not true for CXFS or GPFS.

There are many traps of this kind, so the cluster architecture needs to be carefully considered, and appropriate abstractions need to be put in place (eg. the process_id type) to make sure that cluster functionality is not broken by development on the standalone server.
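As an illustration of the kind of abstraction meant here, the following minimal sketch pairs every node-local value with something that names the node or filesystem it belongs to. The names `ClusterProcessId`, `ClusterFileId`, `node_id` and `fs_id` are hypothetical and are not taken from the Samba source; the sketch also assumes that the inode number itself is consistent across nodes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClusterProcessId:
    """Hypothetical cluster-wide process identifier."""
    node_id: int  # cluster member number, assumed unique within the cluster
    pid: int      # pid_t, only meaningful on the node named by node_id

    def is_local(self, my_node_id: int) -> bool:
        # Only a local process can be reached with kill(2) directly;
        # a remote one has to be reached through cluster messaging.
        return self.node_id == my_node_id


@dataclass(frozen=True)
class ClusterFileId:
    """Hypothetical file key that avoids the per-node dev_t."""
    fs_id: str  # assumed cluster-wide filesystem identifier
    inode: int  # ino_t, assumed to be the same on every node


# The same pid on two different nodes names two different processes.
a = ClusterProcessId(node_id=1, pid=4242)
b = ClusterProcessId(node_id=2, pid=4242)
```

Because both types are immutable and hashable, they can be used directly as keys in a shared table without any node-specific interpretation.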

Clustered Samba Prototypes

Three prototypes of a clustered Samba implementation have been developed in branches on svn.samba.org:

- http://viewcvs.samba.org/cgi-bin/viewcvs.cgi/branches/tmp/jpeach-cluster/

- http://viewcvs.samba.org/cgi-bin/viewcvs.cgi/branches/tmp/vl-cluster/

- http://viewcvs.samba.org/cgi-bin/viewcvs.cgi/branches/tmp/vl-messaging/

Both of these prototypes take a shared TDB approach to distributing locking state. In this approach, all cluster nodes access the same TDB files. The cluster filesystem takes care of ensuring the TDB contents are consistent across the cluster. Both prototypes include extensive modifications to Samba internal data representations to make the information stored in various TDBs node-independent.

The shared TDB approach is simple in terms of the implementation effort required, but it does not meet our performance criteria. The primary reason for this is that the design fundamentally depends on token thrashing behaviour as every cluster node is making updates to the same TDB file. The prototypes attempt to minimise this impact by virtualising the TDB interface and splitting each TDB into a number of separate files. This postpones, but does not alleviate, the problems caused by token thrashing.
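How the prototypes choose a file for a given record is not described here. One plausible way to split a TDB, shown purely as an assumption, is to hash the record key and use the result to select one of a fixed number of files. The function name `shard_path` and the `base.N` naming scheme are hypothetical.

```python
import zlib


def shard_path(base: str, key: bytes, num_shards: int) -> str:
    """Map a record key to one of num_shards files (hypothetical scheme)."""
    if num_shards < 1:
        raise ValueError("num_shards must be at least 1")
    # crc32 is stable across processes and nodes, unlike Python's hash().
    index = zlib.crc32(key) % num_shards
    return f"{base}.{index}"


# Every node computes the same file for the same key.
path = shard_path("locking.tdb", b"some-file-key", 4)
```

Spreading keys over several files only reduces the chance that two nodes contend for the same file at the same moment. Two nodes that update records in the same shard still bounce its tokens, which is why the split postpones the problem.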

Proposed Cluster Architecture

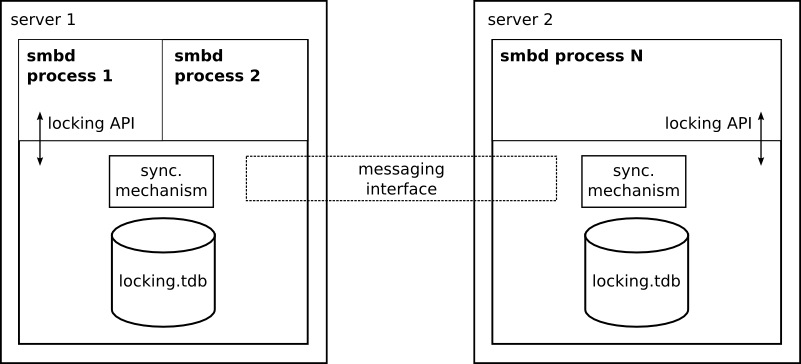

On clustered systems internal messaging can be used to interconnect smbd processes and locking databases:

The first problem with using the SAN as shared storage is performance. If we simply relocate the TDB files to the SAN, we force multiple cluster nodes to access the same files for both read and write. In most cluster implementations this will cause token bouncing and poor performance. There will tend to be high latency for TDB accesses and scalability will be poor for certain workloads.

Fundamentally, any algorithm that involves multiple nodes synchronising on the shared filesystem will suffer from two performance drawbacks:

- it unpredictably increases the likelihood of token thrashing

- the performance characteristics will differ across different cluster implementations

The second problem with using the SAN for IPC is that this will tend to trigger rarely used code paths in the cluster. Depending on the cluster in question, it may make the overall system more susceptible to deadlocks.

Application-level locking

We can alleviate the problems associated with shared filesystem IPC by designating a single node to be an authoritative arbiter of locking state access. We call this node the locking leader.

Instead of accessing the TDBs directly, cluster nodes send a locking query directly to the locking leader. This has good latency characteristics, since the cost of setting or checking a share mode is a single network round trip, whereas accessing a shared TDB file might cause round trips for multiple tokens. A further advantage of an application-specific locking protocol is that the protocol can be specifically designed for low latency.
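The query handling on the locking leader can be sketched as follows. This is a deliberately simplified model and not Samba's actual share mode logic: the three access bits, the `OpenRequest` fields and the `LockingLeader` class are all hypothetical, and the network transport is left out so that only the decision made per round trip is shown.

```python
from dataclasses import dataclass, field

READ, WRITE, DELETE = 1, 2, 4  # simplified access bits (assumption)


@dataclass(frozen=True)
class OpenRequest:
    file_key: str  # node-independent file identifier (hypothetical)
    owner: tuple   # (node_id, pid) of the requesting smbd
    access: int    # access the opener wants
    share: int     # access the opener allows to others


@dataclass
class LockingLeader:
    # file_key -> list of granted OpenRequest entries
    entries: dict = field(default_factory=dict)

    def handle_open(self, req: OpenRequest) -> bool:
        """Grant the open unless it conflicts with an existing holder."""
        holders = self.entries.setdefault(req.file_key, [])
        for held in holders:
            # Conflict if either side wants access the other does not share.
            if req.access & ~held.share or held.access & ~req.share:
                return False
        holders.append(req)
        return True

    def handle_close(self, req: OpenRequest) -> None:
        """Drop a previously granted entry, if present."""
        holders = self.entries.get(req.file_key, [])
        if req in holders:
            holders.remove(req)


leader = LockingLeader()
r1 = OpenRequest("f", (1, 10), READ, READ)
r2 = OpenRequest("f", (2, 20), READ, READ | WRITE)
r3 = OpenRequest("f", (2, 21), WRITE, READ | WRITE)
results = [leader.handle_open(r) for r in (r1, r2, r3)]
```

In this run the two readers are granted and the writer is refused, because the first reader only shares read access. Each decision needs one request and one reply, which is the single round trip referred to above.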

Relocation and Recovery

The disadvantage of this locking scheme is that we suddenly have to deal with membership fluctuations. That is, if the current locking leader loses cluster membership we need to relocate that role to a different cluster member.

The key to avoiding a complicated and error-prone distributed algorithm is to notice that every type of cluster has some kind of identifier that uniquely names each member node. We can take advantage of the ordering implicit in this naming to statically define a policy that can be used to determine the current locking leader.

In CXFS, we could say that the MDS or the CMS leader is always the locking leader. In a cluster of peer nodes, we could say that the lowest numbered node is always the locking leader. All that is necessary is that nodes must be able to determine the leadership order (and hence the current leader) without requiring the agreement of any other node. This means we don't have to maintain a separate Samba cluster membership in sync with the underlying cluster membership.

If the current locking leader fails, the next cluster member in our static ordering becomes the leader. If the distributed state has a persistent backing store in the shared filesystem, the new locking leader can take over without any interruption in service. There are few performance implications to backing the distributed state in the shared filesystem, since only the locking leader is ever updating this state. We assume that any metadata tokens will be migrated to the locking leader, allowing us to approximate the latencies of local filesystems.

If a higher-order cluster member joins the cluster, we have a dilemma. Does the new member become the locking leader or does the role stay with the current leader? I propose that the current leader should relinquish the role of locking leader as soon as it becomes aware that a better candidate is present. This complicates the implementation a little, but makes the cluster rules consistent, which is generally a win.
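For a cluster of peer nodes, the static policy described above can be sketched in a few lines. The sketch assumes that node identifiers are integers, that every node sees the same membership set, and that the lowest number is the best candidate; the function names are hypothetical.

```python
def current_leader(members: set) -> int:
    """Return the locking leader for a given membership view."""
    if not members:
        raise ValueError("empty cluster membership")
    # Lowest numbered node wins; no agreement protocol is needed.
    return min(members)


def must_relinquish(my_id: int, members: set) -> bool:
    """True if this node holds the role but a better candidate is present."""
    return current_leader(members) != my_id


view = {3, 5, 7}
first = current_leader(view)           # node 3 leads
after_failure = current_leader({5, 7})  # node 3 has left, node 5 takes over
after_join = current_leader({2, 5, 7})  # node 2 joins and outranks node 5
```

Because every node evaluates the same pure function over the same membership view, failover and relinquishing follow from the rule itself instead of from a separate election.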

Note that this scheme relies on all cluster members having access to accurate and timely cluster membership information. I believe that this is not a very onerous requirement, since all clusters that I am aware of already maintain their own membership state. Also note that this does not introduce any additional membership state or algorithms.

Absent cluster members: There may be administrative reasons for not enabling Samba on every node of the cluster. In this case the locking leadership order might have holes.

In the case of a cluster where the locking leader can be present on any node, this could mean that finding the locking leader could take up to N network probes (in the absence of a cleverer mechanism). This might be acceptable provided that the locking leader does not relocate too often.
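The probing cost can be illustrated with a small sketch that walks the leadership order and stops at the first node that runs Samba. The `runs_samba` callback stands in for a network probe and is hypothetical, as is the assumption that nodes are ordered by integer identifier.

```python
from typing import Callable, Iterable, Optional


def find_leader(members: Iterable[int],
                runs_samba: Callable[[int], bool]) -> Optional[int]:
    """Probe nodes in leadership order; return the first one running Samba."""
    for node in sorted(members):
        if runs_samba(node):  # one network probe per candidate
            return node
    return None  # no member of the cluster runs Samba


# Nodes 1 and 2 are cluster members without Samba: holes in the order.
enabled = {4, 6}
probed = []


def probe(node: int) -> bool:
    probed.append(node)
    return node in enabled


leader_node = find_leader([6, 2, 4, 1], probe)
```

Here three probes are needed before node 4 answers. A node could cache the result and probe again only when the cluster membership changes, which keeps the cost low as long as the leader rarely relocates.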