Samba CTDB GPFS Cluster HowTo


=Creating a Samba Cluster with CTDB and GPFS on CentOS=

Want to build a scalable networked storage system that is always available? A system that integrates with your Active Directory and supports the SMB2 protocol and ACLs? Then read on...

== Warning: Out of date!!! ==

Please note that this guide is very old and doesn't contain the latest instructions for configuring CTDB and Samba. For the parts covering CTDB and Samba specific configuration please follow the various sections under [[CTDB and Clustered Samba]].

==Assumed knowledge==

This guide is written to walk relatively inexperienced users through the setup of a fairly complex system in a step-by-step fashion. However, for the sake of brevity I'm going to assume you are reasonably comfortable with GNU/Linux, can use Vim, Emacs or Nano, have a grasp of basic networking, and are not afraid of compiling some code :). Elements of this setup are somewhat interchangeable. You could probably replace CentOS with another distribution, but you would then need to be familiar enough with that distribution to adapt the commands and paths. Others may wish to replace GPFS with an alternative clustered file system or use a variation of my Samba configuration below. This setup has been tested in a non-production environment. Deploying it in a production environment is at your own risk, and the author assumes no responsibility.

==Preparing the Servers== |

|||

This is a simple test setup. So i’m going to use a couple of KVM VM’s but the same principles should apply . This approach should scale to many physical/ virtual servers in a production environment. |

|||

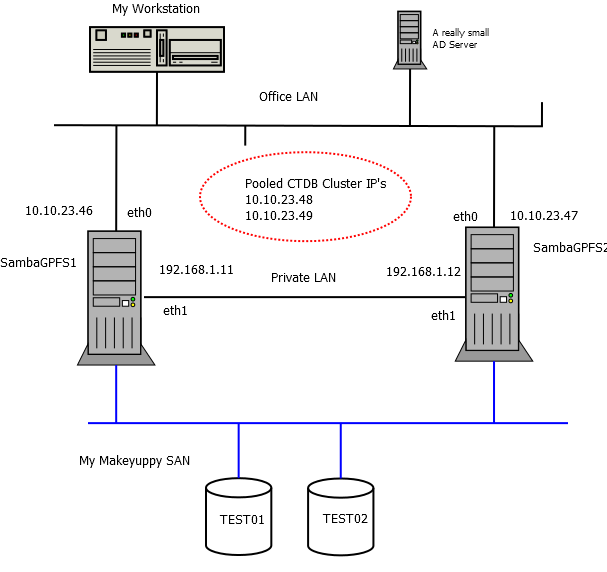

This is a simple test setup. I’m going to use a couple of KVM VM’s but the same principles should apply on bare metal . This approach should scale to many physical/virtual servers . The diagram below illustrates our setup. |

|||

Install Centos 6. |

|||

First we want to install a couple of servers. I have chosen Centos as it is binary compatible with RHEL 6 which is well supported by both GPFS and Samba. |

|||

Create a couple of CENTOS 6 VM’s (I use virt-manager on CENTOS 6 but you can use any tools you like) . I use an ISO image CentOS-6.4-x86_64-minimal.iso. The VM will have 1 CPU and 1G RAM and an 8Gig disk . Initially i allocate 1 NIC but i add a further NIC for a private LAN later. |

|||

Select all the usual defaults. Select the option to manually configure networking as we are going to coufigure this after install. Also make sure you install the ssh server. Once the servers are installed we need to set the IP address. You can do this by editing /etc/sysconfig/network-scripts/ifcfg-eth0 file and setting static ip addresses .These are the publically accessible ip addresses of the servers. For reference here is the ifcfig-eth0 file on the sambagpfs1 server : |

|||

DEVICE=eth0 |

|||

HWADDR=52:54:00:D1:C5:25 |

|||

TYPE=Ethernet |

|||

UUID=0b83419e-f28a-4a6d-84e5-64c813bf4f51 |

|||

ONBOOT=yes |

|||

NM_CONTROLLED=yes |

|||

IPADDR="10.10.23.46" |

|||

NETMASK="255.255.255.0" |

|||

GATEWAY="10.10.23.253" |

|||

[[File:sambagpfs.png]] |

|||

Don’t forget to set a valid dns server in /etc/resolv.conf also. Once you're sure you have a working network connection, install the latest updates with the yum update command. |

|||

Configure shared disks |

|||

In virt-manager create a couple of 1GB IDE disks for our GPFS cluster. When you try to add the disk to the 2nd server (sambagpfs2) virt-manager will give you a warning that “the disk is already in use by another guest” but this is ok. We are building a clustered filesystem where shared access to the underlying disks is necessary. |

|||

===Install CENTOS 6.=== |

|||

In a production scenario these disks would usually be shared LUN’s on a SAN. When you reboot your servers you should see the additional disks as reported by the dmesg command. You should see something like : |

|||

sd 0:0:0:0: [sda] 2048000 512-byte logical blocks: (1.04 GB/1000 MiB) |

|||

First we want to install a couple of servers. I have chosen CENTOS 6 as it is binary compatible with RHEL 6 which is well supported by both GPFS and Samba. |

|||

sd 0:0:1:0: [sdb] 2048000 512-byte logical blocks: (1.04 GB/1000 MiB) |

|||

Create a couple of CENTOS 6 VM’s (I use virt-manager on CENTOS 6 but you can use any tools you like) . I use an ISO image CentOS-6.4-x86_64-minimal.iso. The VM will have 1 CPU and 1G RAM and an 8Gig disk . Initially I allocate 1 NIC but we will add a further NIC for a private LAN later. |

|||

Select all the usual defaults. Select the option to manually configure networking as we are going to configure this after install. Also make sure you install the SSH server. Once the servers are installed we need to set the IP address. You can do this by editing ''/etc/sysconfig/network-scripts/ifcfg-eth0'' file and setting static IP addresses .These are the publicly accessible IP addresses of the servers. For reference here is the ifcfig-eth0 file on the sambagpfs1 server : |

|||

DEVICE=eth0 |

|||

HWADDR=52:54:00:D1:C5:25 |

|||

TYPE=Ethernet |

|||

UUID=0b83419e-f28a-4a6d-84e5-64c813bf4f51 |

|||

ONBOOT=yes |

|||

NM_CONTROLLED=yes |

|||

IPADDR="10.10.23.46" |

|||

NETMASK="255.255.255.0" |

|||

GATEWAY="10.10.23.253" |

|||

Don’t forget to set a valid DNS server in /etc/resolv.conf also. Once you're sure you have a working network connection, install the latest updates with the ''yum update'' command. |
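With more than a couple of nodes it is easy to mistype one of these files, so you may prefer to generate them. Below is a minimal sketch that assumes Python 3.6+ on an admin host (CentOS 6 itself ships Python 2.6). The helper `render_ifcfg` is hypothetical and not part of CentOS. It produces the same KEY=VALUE lines as the sample above but leaves out UUID, which is optional. HWADDR and GATEWAY are also optional, so the same function can produce the private ifcfg-eth1 file described later.

```python
def render_ifcfg(device, ipaddr, netmask="255.255.255.0", gateway=None, hwaddr=None):
    """Return the text of an ifcfg-<device> file in the style used in this guide.

    Hypothetical helper: keys and quoting mirror the sample ifcfg-eth0 file.
    HWADDR and GATEWAY are only written when they are given.
    """
    lines = ["DEVICE=" + device]
    if hwaddr:
        lines.append("HWADDR=" + hwaddr)
    lines += [
        "TYPE=Ethernet",
        "ONBOOT=yes",
        "NM_CONTROLLED=yes",
        f'IPADDR="{ipaddr}"',
        f'NETMASK="{netmask}"',
    ]
    if gateway:
        lines.append(f'GATEWAY="{gateway}"')
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Public interface of sambagpfs1, as in the sample above (UUID omitted).
    print(render_ifcfg("eth0", "10.10.23.46", gateway="10.10.23.253",
                       hwaddr="52:54:00:D1:C5:25"), end="")
```

Redirect the output into ''/etc/sysconfig/network-scripts/ifcfg-eth0'' on the target server, then apply it with ''service network restart''.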

===Configure shared disks===

In virt-manager, create a couple of 1 GB IDE disks for our GPFS cluster. When you add the disks to the second server (sambagpfs2), virt-manager will warn you that "the disk is already in use by another guest". This is OK: we are building a clustered file system, and it needs shared access to the underlying disks.

In a production scenario these disks would usually be shared LUNs on a SAN. When you reboot your servers you should see the additional disks reported by the ''dmesg'' command, something like:

 sd 0:0:0:0: [sda] 2048000 512-byte logical blocks: (1.04 GB/1000 MiB)
 sd 0:0:1:0: [sdb] 2048000 512-byte logical blocks: (1.04 GB/1000 MiB)

Make sure you can see both disks from both servers (sambagpfs1 and sambagpfs2).

===Disable SELinux and iptables===

There appears to be a communication problem between the GPFS daemons when SELinux is enabled. Edit the ''/etc/selinux/config'' file and set SELINUX=disabled.
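If you prefer to script this edit, here is a minimal sketch that assumes Python 3.6+. The helper name `disable_selinux` is hypothetical. It changes only the active SELINUX= line and leaves SELINUXTYPE= and the comments alone. The new setting takes effect at the next reboot. Until then, ''setenforce 0'' switches the running system to permissive mode.

```python
import re

# Matches only the active "SELINUX=..." line. "SELINUXTYPE=..." and commented
# lines such as "# SELINUX= can take..." do not match.
_SELINUX_LINE = re.compile(r"^SELINUX=.*$", re.MULTILINE)


def disable_selinux(config_text):
    """Return /etc/selinux/config text with SELINUX set to disabled."""
    if _SELINUX_LINE.search(config_text):
        return _SELINUX_LINE.sub("SELINUX=disabled", config_text)
    # No SELINUX= line at all: append one, keeping the file newline-terminated.
    if config_text and not config_text.endswith("\n"):
        config_text += "\n"
    return config_text + "SELINUX=disabled\n"
```

As root on each server, you could apply it with `cfg.write_text(disable_selinux(cfg.read_text()))`, where `cfg = pathlib.Path("/etc/selinux/config")`.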

Also stop the iptables service and disable it at boot:

''service iptables stop''

''chkconfig iptables off''

It goes without saying that you need to consider these steps more carefully in a production environment.

===Create an additional network card and set up passwordless login===

Create an additional network card in virt-manager for each of the guests. These NICs carry the internal GPFS and CTDB traffic between the guests, so we place them on the virtual network 'default' (NAT). Give the NICs sensible addresses, something like 192.168.1.x.

Create a file ''/etc/sysconfig/network-scripts/ifcfg-eth1'' for the new network interface. Below is a sample config for sambagpfs1. On sambagpfs2, use 192.168.1.12.

 DEVICE=eth1
 TYPE=Ethernet
 ONBOOT=yes
 NM_CONTROLLED=yes
 IPADDR="192.168.1.11"
 NETMASK="255.255.255.0"

Generate an SSH key with the ''ssh-keygen -t rsa'' command on sambagpfs1 and copy it to the other server with ''ssh-copy-id -i /root/.ssh/id_rsa.pub 192.168.1.12''.

Also, in the ~/.ssh folder, run ''cat id_rsa.pub >> authorized_keys''. This is necessary because our GPFS setup uses SSH to execute commands locally as well as remotely. Otherwise we would be prompted for a login on the local machine when executing GPFS commands.

Now perform the inverse of the above on the second server, sambagpfs2. You should then be able to move between the two servers with the ''ssh <IP address>'' command without a password. If not, use the -v switch of ssh to debug.
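Running ''cat id_rsa.pub >> authorized_keys'' twice leaves a duplicate entry. Below is a minimal, idempotent sketch of the same step, assuming Python 3.6+. `add_authorized_key` is a hypothetical helper, not part of OpenSSH. It also sets the file mode to 0600, because sshd's StrictModes check refuses key files that are writable by group or others.

```python
from pathlib import Path


def add_authorized_key(ssh_dir, pubkey_name="id_rsa.pub"):
    """Idempotent version of `cat id_rsa.pub >> authorized_keys`.

    Appends the public key only if an identical line is not already present,
    then restricts authorized_keys to mode 0600.
    Returns True if the key was appended, False if it was already there.
    """
    ssh_dir = Path(ssh_dir)
    key = (ssh_dir / pubkey_name).read_text().strip()
    auth = ssh_dir / "authorized_keys"
    current = auth.read_text() if auth.exists() else ""
    if key in (line.strip() for line in current.splitlines()):
        return False
    # Make sure the new key starts on its own line.
    prefix = "" if current == "" or current.endswith("\n") else "\n"
    with auth.open("a") as fh:
        fh.write(prefix + key + "\n")
    auth.chmod(0o600)
    return True
```

Run it as root with `add_authorized_key("/root/.ssh")` on each server after ''ssh-keygen''.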

===Hostnames in hosts file===

Add your hostnames and internal IP addresses to the ''/etc/hosts'' file on both servers. My settings are shown below for reference:

 192.168.1.11 sambagpfs1
 192.168.1.12 sambagpfs2
 10.10.23.48 smbgpfscluster
 10.10.23.49 smbgpfscluster

The two smbgpfscluster entries are the public addresses that CTDB moves between the nodes. They reappear in the public_addresses file below.
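The same entries must go on both servers, so a small script can generate them and merge them into an existing /etc/hosts without creating duplicates. Below is a minimal sketch assuming Python 3.6+. `hosts_lines` and `merge_hosts` are hypothetical helpers. Every address is validated with the standard `ipaddress` module, so a typo raises ValueError instead of silently breaking name resolution.

```python
import ipaddress


def hosts_lines(nodes, cluster_name, public_ips):
    """Return /etc/hosts lines for the cluster.

    nodes: mapping of hostname -> private (192.168.1.x) address.
    public_ips: the CTDB public addresses; each gets a line for cluster_name.
    """
    lines = [f"{ipaddress.ip_address(ip)} {name}" for name, ip in nodes.items()]
    lines += [f"{ipaddress.ip_address(ip)} {cluster_name}" for ip in public_ips]
    return lines


def merge_hosts(existing_text, new_lines):
    """Append only the lines that are not already present (ignoring spacing)."""
    present = {" ".join(line.split()) for line in existing_text.splitlines()}
    missing = [line for line in new_lines if line not in present]
    text = existing_text
    if text and not text.endswith("\n"):
        text += "\n"
    return text + "".join(line + "\n" for line in missing)
```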

===Keep time synchronised===

Install the ntp service:

''yum install ntp''

Set your local NTP server in ''/etc/ntp.conf'', then set the service to start on boot:

''chkconfig ntpd on''

and start the service:

''service ntpd start''

Check that the time is synchronised using the ''date'' command.

Now we are just about ready to begin installing GPFS :).

== Install and configure GPFS ==

===Installing GPFS and dependencies===

Important! Ensure you have the appropriate licensing before installing GPFS. For more information see http://publib.boulder.ibm.com/infocenter/clresctr/vxrx/index.jsp?topic=%2Fcom.ibm.cluster.gpfs.doc%2Fgpfs_faqs%2Fgpfsclustersfaq.html

Copy the appropriate GPFS RPMs onto your servers. You must install the base GPFS RPMs first and then the patch RPMs.

===Machine 1 - sambagpfs1===

First we need to install the dependencies required to install GPFS and to build the portability layer RPM:

''yum install perl rsh ksh compat-libstdc++-33 make kernel-devel gcc gcc-c++ rpm-build''

Now install the GPFS base RPMs:

''rpm -ivh gpfs.*0-0*''

then the patch RPMs:

''rpm -Uvh gpfs.*0-14*.rpm''

Build the portability layer. The following commands are run from the GPFS source directory, ''/usr/lpp/mmfs/src'' on a standard install:

''make LINUX_DISTRIBUTION=REDHAT_AS_LINUX Autoconfig''

''make World''

''make rpm'' (note that it is not best practice to build RPMs as root)

This builds an RPM for the portability layer. The benefit of building this RPM is that the other cluster members do not need the compiler and kernel-devel prerequisites.

Install the portability RPM. The file name depends on your kernel and GPFS versions:

''rpm -Uvh /root/rpmbuild/RPMS/x86_64/gpfs.gplbin-2.6.32-358.18.1.el6.x86_64-3.4.0-14.x86_64.rpm''

In general it is bad practice to build as root. See http://serverfault.com/questions/10027/why-is-it-bad-to-build-rpms-as-root . As this is a non-production test system, I'm going to give myself a pass (for the moment).

Finally, copy the portability RPM to the other cluster servers, in this case sambagpfs2.

===Machine 2 - sambagpfs2===

Install the dependencies. No compiler is needed here:

''yum install perl rsh ksh compat-libstdc++-33''

then the base RPMs:

''rpm -ivh gpfs.*0-0*''

the patch RPMs:

''rpm -Uvh gpfs.*0-14*.rpm''

and finally the portability RPM copied over from sambagpfs1:

''rpm -Uvh gpfs.gplbin-2.6.32-358.18.1.el6.x86_64-3.4.0-14.x86_64.rpm''

===Update your path to include the GPFS administration commands===

Add the GPFS commands to your path. You don't strictly need to do this, but it makes administering GPFS more convenient!

Edit your .bash_profile and set the path to something like:

 PATH="/usr/lpp/mmfs/bin:${PATH}:$HOME/bin"

and run ''source .bash_profile'' to update your path.

===Creating the GPFS Cluster===

Create a file gpfsnodes.txt containing information about our GPFS cluster nodes:

 sambagpfs1:manager-quorum:
 sambagpfs2:manager-quorum:

Create our test cluster using the mmcrcluster command:

''mmcrcluster -N gpfsnodes.txt -p sambagpfs1 -s sambagpfs2 -r /usr/bin/ssh -R /usr/bin/scp -C SAMBAGPFS -A''

This sets sambagpfs1 as the primary cluster configuration server and sambagpfs2 as the secondary. -r and -R make GPFS use ssh and scp for remote commands, which is why we set up passwordless login earlier. -C names the cluster SAMBAGPFS, and -A specifies that the GPFS daemons start on boot.
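Each line of gpfsnodes.txt is a GPFS node descriptor of the form NodeName:NodeDesignations:AdminNodeName. The trailing colon above simply leaves the admin node name empty. Both nodes are quorum nodes, and with only two of them this is why a tiebreaker disk is added later. Below is a minimal sketch assuming Python 3.6+, with hypothetical helper names. The designation list reflects GPFS 3.x and should be checked against the mmcrcluster documentation for your release.

```python
# Designations accepted in the middle field of a node descriptor
# (NodeName:NodeDesignations:AdminNodeName); several are joined with "-".
ROLES = {"manager", "client", "quorum", "nonquorum"}


def node_descriptor(node, roles=("manager", "quorum"), admin_name=""):
    """Return one node-file line, e.g. 'sambagpfs1:manager-quorum:'."""
    unknown = set(roles) - ROLES
    if unknown:
        raise ValueError(f"unknown designation(s): {sorted(unknown)}")
    if {"manager", "client"} <= set(roles) or {"quorum", "nonquorum"} <= set(roles):
        raise ValueError("contradictory designations: " + "-".join(roles))
    return f"{node}:{'-'.join(roles)}:{admin_name}"


def node_file(nodes, roles=("manager", "quorum")):
    """Return the full contents of a node file such as gpfsnodes.txt."""
    return "".join(node_descriptor(n, roles) + "\n" for n in nodes)
```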

Accept the license for your servers:

''mmchlicense server --accept -N sambagpfs1''

''mmchlicense server --accept -N sambagpfs2''

Use the ''mmlscluster'' command to verify that the cluster has been created correctly.

Now create the NSDs (Network Shared Disks) for our two shared disks, /dev/sda and /dev/sdb.

First create two files, nsd.txt:

 sda:sambagpfs1,sambagpfs2::dataAndMetadata:1:test01:system

and nsd2.txt:

 sdb:sambagpfs2,sambagpfs1::dataAndMetadata:1:test02:system

Now issue the command:

''mmcrnsd -F nsd.txt''

and

''mmcrnsd -F nsd2.txt''

Use the ''mmlsnsd'' command to verify creation.
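The two files above use the GPFS 3.x disk descriptor format, DiskName:ServerList::DiskUsage:FailureGroup:DesiredName:StoragePool. Newer GPFS releases favour a stanza-file format instead. Note that nsd2.txt lists sambagpfs2 first. As I understand it, GPFS prefers the first server in the list, so each server is preferred for one of the disks. Below is a minimal sketch assuming Python 3.6+. `disk_descriptor` is a hypothetical helper that only builds the line; it does not talk to GPFS.

```python
DISK_USAGES = {"dataAndMetadata", "dataOnly", "metadataOnly", "descOnly"}


def disk_descriptor(device, servers, nsd_name, usage="dataAndMetadata",
                    failure_group=1, pool="system"):
    """Return a GPFS 3.x disk descriptor line:

        DiskName:ServerList::DiskUsage:FailureGroup:DesiredName:StoragePool

    The third field is left empty, as in nsd.txt and nsd2.txt above.
    Servers are written in the order given.
    """
    if usage not in DISK_USAGES:
        raise ValueError(f"unknown disk usage {usage!r}")
    if not servers:
        raise ValueError("at least one NSD server is required in this setup")
    fields = [device, ",".join(servers), "", usage, str(failure_group), nsd_name, pool]
    return ":".join(fields)
```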

Start up the cluster:

''mmstartup -a''

Check the cluster is up with ''mmgetstate -a''. Each node should report the state active.

Create the GPFS file system:

''mmcrfs /dev/sambagpfs -F nsd.txt -A yes -B 256K -n 2 -M 2 -r1 -R2 -T /sambagpfs''

The options used are:
* ''-B 256K'' sets the block size.
* ''-n 2'' is the estimated number of nodes that will mount the file system.
* ''-r1'' keeps a single copy of the data, while ''-M 2'' and ''-R2'' allow metadata and data replication to be raised to two copies later.
* ''-A yes'' mounts the file system automatically when GPFS starts.
* ''-T'' sets the mount point.

Add the second disk to the file system:

''mmadddisk sambagpfs -F nsd2.txt''

Use ''mmlsnsd'' to verify that both disks have been added to the cluster file system. You should see something similar to the following:

 File system   Disk name    NSD servers
 ---------------------------------------------------------------------------
 sambagpfs     test01       sambagpfs1,sambagpfs2
 sambagpfs     test02       sambagpfs2,sambagpfs1
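If you script the build, you can check the mmlsnsd output instead of reading it by eye. Below is a minimal sketch assuming Python 3.6+ and the exact three-column layout shown above; other GPFS releases or mmlsnsd options may format the output differently. `parse_mmlsnsd` and `unassigned` are hypothetical helpers.

```python
def parse_mmlsnsd(output):
    """Map NSD name -> (file system, [NSD servers]) from mmlsnsd's default table.

    The header, the dashed rule and any row that does not split into exactly
    three fields (for example a free disk shown as "(free disk)") are skipped.
    """
    nsds = {}
    for line in output.splitlines():
        fields = line.split()
        if len(fields) != 3:
            continue
        fs, disk, servers = fields
        nsds[disk] = (fs, servers.split(","))
    return nsds


def unassigned(nsds, expected_fs, expected_disks):
    """Return expected disks that are missing or belong to another file system."""
    return sorted(d for d in expected_disks
                  if d not in nsds or nsds[d][0] != expected_fs)
```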

We only have two nodes in our GPFS cluster, so we need a tiebreaker disk to maintain quorum if one node goes down. Shut down GPFS to add the tiebreaker disk:

''mmshutdown -a''

''mmchconfig tiebreakerdisks=test01''

To ensure recent versions of Microsoft Excel work correctly over SMB2, we also need to set the following GPFS configuration option (many thanks to Dan Cohen at IBM - XIV for this tip):

''mmchconfig cifsBypassShareLocksOnRename=yes -i''

Start up our GPFS cluster again:

''mmstartup -a''

Mount the GPFS file system:

''mmmount sambagpfs -a''

At this point you should see the GPFS file system mounted at /sambagpfs on both servers. Congratulations! Take a break, stand up and walk around for a bit.

== Install and Configure Samba and CTDB ==

I use the SerNet Samba 4 RPMs from http://enterprisesamba.com/ . You have to register at the site (free) to download the RPMs. There appears to be a problem with Windows 7 (which uses SMB2 if the server offers it) on the latest release, 4.0.10, so I am currently using the 4.0.9 release. I have yet to test with 4.1. Once you have registered, you can find the 4.0.9 RPMs at https://download.sernet.de/packages/samba/old/4.0/rpm/4.0.9-5/centos/6/x86_64/ . You will need to perform the following installation steps on both of your servers.

You need to download the following RPMs:

 sernet-samba-libwbclient0-4.0.9-5.el6.x86_64
 sernet-samba-libsmbclient0-4.0.9-5.el6.x86_64
 sernet-samba-4.0.9-5.el6.x86_64
 sernet-samba-common-4.0.9-5.el6.x86_64
 sernet-samba-libs-4.0.9-5.el6.x86_64
 sernet-samba-client-4.0.9-5.el6.x86_64
 sernet-samba-winbind-4.0.9-5.el6.x86_64

First we need to install a couple of dependencies:

''yum install redhat-lsb-core cups-libs''

Then install the Samba packages:

''rpm -Uvh sernet-samba-*''

We want CTDB to control Samba, so stop the Samba daemons from starting on boot on our servers:

''chkconfig sernet-samba-nmbd off''

''chkconfig sernet-samba-smbd off''

''chkconfig sernet-samba-winbindd off''

===Install and configure CTDB===

Download the latest CTDB sources (2.4 at the time of writing) onto the sambagpfs1 server from http://download.samba.org/pub/ctdb/ctdb-2.4.tar.gz .

Extract the tar.gz onto the GPFS file system /sambagpfs and, on the sambagpfs1 server, run:

''yum install autoconf''

''./autogen.sh''

''./configure''

''make''

''make install''

Because the source tree sits on the shared GPFS file system, you can now run ''make install'' from the same directory on sambagpfs2 as well.

Create ''/etc/sysconfig/ctdb'' on both servers with the following contents:

 CTDB_RECOVERY_LOCK=/sambagpfs/recovery.lck
 CTDB_PUBLIC_INTERFACE=eth0
 CTDB_PUBLIC_ADDRESSES=/usr/local/etc/ctdb/public_addresses
 CTDB_MANAGES_SAMBA=yes
 CTDB_MANAGES_WINBIND=yes
 CTDB_NODES=/usr/local/etc/ctdb/nodes
 CTDB_SERVICE_WINBIND=sernet-samba-winbindd
 CTDB_SERVICE_SMB=sernet-samba-smbd
 CTDB_SERVICE_NMB=sernet-samba-nmbd
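Two things in this file are easy to get wrong. First, the recovery lock must live on the shared GPFS file system, because CTDB uses a lock on that file, visible to all nodes, to elect a single recovery master. Second, the SerNet init script names must be spelled exactly. Below is a minimal checking sketch assuming Python 3.6+. `parse_sysconfig` and `check_ctdb_settings` are hypothetical helpers, and the service-name rule is specific to this SerNet-based setup rather than a general CTDB requirement.

```python
from pathlib import PurePosixPath


def parse_sysconfig(text):
    """Parse simple KEY=VALUE lines such as /etc/sysconfig/ctdb.

    Blank lines and '#' comments are ignored and surrounding double quotes are
    removed. Shell features (variables, line continuations) are not handled.
    """
    settings = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        settings[key.strip()] = value.strip().strip('"')
    return settings


def check_ctdb_settings(settings, shared_mount="/sambagpfs"):
    """Return a list of problems found in the parsed CTDB settings."""
    problems = []
    lock = settings.get("CTDB_RECOVERY_LOCK")
    if not lock:
        problems.append("CTDB_RECOVERY_LOCK is not set")
    elif PurePosixPath(shared_mount) not in PurePosixPath(lock).parents:
        problems.append(f"recovery lock {lock} is not on {shared_mount}")
    # The SerNet packages use non-default init script names, so each managed
    # service should come with an explicit service name in this setup.
    for flag, service in (("CTDB_MANAGES_SAMBA", "CTDB_SERVICE_SMB"),
                          ("CTDB_MANAGES_WINBIND", "CTDB_SERVICE_WINBIND")):
        if settings.get(flag) == "yes" and not settings.get(service):
            problems.append(f"{flag}=yes but {service} is not set")
    return problems
```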

Next create the directory /usr/local/var:

''mkdir /usr/local/var''

Then, on both servers, change to the /usr/local/etc/ctdb/ directory and create the following two files. ''nodes'' lists the private addresses of all cluster members, and ''public_addresses'' lists the floating addresses that CTDB moves between them (the smbgpfscluster entries in /etc/hosts).

''nodes''

 192.168.1.11
 192.168.1.12

''public_addresses''

 10.10.23.48/24 eth0
 10.10.23.49/24 eth0

Also create the shared recovery lock file:

''touch /sambagpfs/recovery.lck''
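Both files have a very simple format, so they are easy to generate for larger clusters. Below is a minimal sketch assuming Python 3.6+, with hypothetical helper names. It validates every address with the standard `ipaddress` module before writing it out. The nodes file should be identical on every node, since CTDB numbers nodes by their position in it.

```python
import ipaddress


def render_nodes(private_ips):
    """Contents of the CTDB nodes file: one private address per line."""
    return "".join(f"{ipaddress.ip_address(ip)}\n" for ip in private_ips)


def render_public_addresses(public_ips, prefixlen, iface):
    """Contents of public_addresses: '<address>/<prefixlen> <interface>' per line."""
    lines = []
    for ip in public_ips:
        addr = ipaddress.ip_interface(f"{ip}/{prefixlen}")
        lines.append(f"{addr.with_prefixlen} {iface}")
    return "".join(line + "\n" for line in lines)
```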

===Configuring Samba===

Edit the ''/etc/default/sernet-samba'' file and set the following parameter, so that the classic smbd/nmbd/winbindd daemons are used rather than the AD domain controller:

 SAMBA_START_MODE="classic"

Now we need to create our smb.conf in /etc/samba, using the same file on both servers. I have copied the contents of my smb.conf file below for reference:

 #===== Global Settings ============
 [global]
     netbios name = smbgpfscluster
     server string = Samba Version %v on %h
     workgroup = HOHO
     security = ADS
     realm = HOHO.BALE.COM
     # These were useful for debugging my initial setup but are probably too verbose for general use
     log level = 3 passdb:3 auth:3 winbind:10 idmap:10
     idmap config *:backend = tdb2
     idmap config *:range = 1000-90000
     winbind use default domain = yes
     # Set these to no, as enumeration doesn't work well when you have thousands of users in your domain
     winbind enum users = no
     winbind enum groups = no
     winbind cache time = 900
     winbind normalize names = no
     clustering = yes
     unix extensions = no
     mangled names = no
     ea support = yes
     store dos attributes = yes
     map readonly = no
     map archive = no
     map system = no
     force unknown acl user = yes
     # Stuff necessary for guest logins to work where required
     guest account = nobody
     map to guest = bad user
 #============ Share Definitions ============
 [gpfstest]
     comment = GPFS Cluster on %h using %R protocol
     path = /sambagpfs
     writeable = yes
     create mask = 0770
     force create mode = 0770
     locking = yes
     vfs objects = gpfs fileid
     # vfs_gpfs settings
     gpfs:sharemodes = yes
     gpfs:winattr = yes
     nfs4:mode = special
     nfs4:chown = yes
     nfs4:acedup = merge
     # some vfs related to clustering
     fileid:algorithm = fsname
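''testparm'' is Samba's own syntax checker and should be your first stop. It does not know which settings this particular cluster needs, though. Below is a minimal sketch, assuming Python 3.6+ with hypothetical helper names, that checks the cluster-specific lines of the configuration above:
* ''clustering = yes'' in [global];
* both the gpfs and fileid VFS modules in the share;
* a fileid:algorithm setting in the share.

```python
import configparser


def load_smbconf(text):
    """Parse smb.conf text with configparser.

    smb.conf is INI-like, but parameter names contain ':' (gpfs:sharemodes),
    values contain '%' substitutions (%v, %h), parameters are usually indented
    and repeated parameters are tolerated. So: split only on '=', disable
    interpolation, strip indentation first (configparser would otherwise read
    indented lines as continuations) and use strict=False.
    """
    parser = configparser.ConfigParser(delimiters=("=",), interpolation=None,
                                       strict=False)
    parser.read_string("\n".join(line.strip() for line in text.splitlines()))
    return parser


def check_clustered_share(parser, share):
    """Return problems with the cluster-related settings used in this guide."""
    truthy = {"yes", "true", "1"}
    problems = []
    if parser.get("global", "clustering", fallback="no").lower() not in truthy:
        problems.append("[global] clustering is not enabled")
    vfs = parser.get(share, "vfs objects", fallback="").split()
    for module in ("gpfs", "fileid"):
        if module not in vfs:
            problems.append(f"[{share}] vfs objects lacks {module}")
    if "fileid" in vfs and not parser.has_option(share, "fileid:algorithm"):
        problems.append(f"[{share}] fileid:algorithm is not set")
    return problems
```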

===Notes on Samba config===

This is a configuration for an AD domain member server. I did not have the necessary privileges on our AD to install the RFC2307/SFU schema extensions. If you have such access, that would be a better way to proceed, as you would get consistent UID/GID allocation between clusters.

Edit ''/etc/nsswitch.conf'' so that user and group lookups also go through winbind:

 passwd: files winbind
 shadow: files
 group: files winbind

In /etc/krb5.conf file set your default realm |

|||

''ctdbd --syslog --debug=3'' |

|||

default_realm = HOHO.BALE.COM |

|||

''run ctdb status'' |

|||

Now start the ctdb daemon on both servers. |

|||

ctdbd --syslog --debug=3 |

|||

run ctdb status |

|||

At this stage the nodes will report as unhealthy as winbind will not start as we have not joined the domain. So lets join the domain. |

At this stage the nodes will report as unhealthy as winbind will not start as we have not joined the domain. So lets join the domain. |

||

net ads join -U <some account with the necessary privileges> -d5 |

''net ads join -U <some account with the necessary privileges> -d5'' |

||

All going well you have successfully joined the domain if not the debug information will assist you in finding the issue. |

All going well you have successfully joined the domain if not the debug information will assist you in finding the issue. |

||

winbind will not have started successfully when we first started |

winbind will not have started successfully when we first started CTDB so we can start it manually now. |

||

service sernet-samba-winbindd start |

''service sernet-samba-winbindd start'' |

||

Check the winbind daemon has started : |

Check the winbind daemon has started : |

||

wbinfo -p |

|||

''wbinfo -p'' |

|||

and verify you have successfully joined the domain : |

and verify you have successfully joined the domain : |

||

wbinfo -t |

|||

''wbinfo -t'' |

|||

and can authenticate a user against the domain : |

and can authenticate a user against the domain : |

||

wbinfo -a <avaliddomainusername> |

|||

''wbinfo -a <avaliddomainusername>'' |

|||

In addition the following command should return valid user information |

|||

id <availdomainusername> |

|||

In addition the following command should return valid user information. |

|||

''id <vaildomainusername>'' |

|||

wbinfo -u should list the users on your domain . Our domain has 10’s of thousands of users so this may take some time. You may even have to to run run wbinfo a couple of times to get valid results. |

|||

ctdb ip should report the current assignment of our cluster ip’s . |

|||

The wbinfo -u command should list the users on your domain . Our domain has 10’s of thousands of users so this may take some time. You may even have to to run run wbinfo a couple of times to get valid results. |

|||

Now try to access the shares from windows. For some reason that i could not fathom i was getting an access denied when i tried to access that shares at this point. I rebooted both servers and this appeared to resolve the issue :). |

|||

The ''ctdb ip'' command should report the current assignment of our cluster ip’s . |

|||

Setting Some ACL’s |

|||

Now try to access the shares from windows. For some reason that I could not fathom I was getting an access denied when I tried to access that shares at this point. I rebooted both servers and this appeared to resolve the issue :). |

|||

You can use mmputacl to set ACL;s on the share . Create a text file like myperms.txt |

|||

#owner:root |

|||

#group:root |

|||

user::rwxc |

|||

group::rwx- |

|||

other::rwx- |

|||

mask::rwxc |

|||

user:root:rwx- |

|||

user:joebloggs:rwx- |

|||

group:root:rwx- |

|||

group:infosystems:rwx- |

|||

To apply these permissions : |

|||

mmputacl -i ~/perms.txt -i sambagpfs |

|||

or set the default permissions on a folder : |

|||

== GPFS ACL’s == |

|||

mmputacl -d -i ~/perms.txt /sambagpfs/folder |

|||

The only really tested GPFS ACLs style is -k nfsv4. |

|||

To test you permissions create a file in \\10.10.23.48\sambagpfs on the share from a windows machine. Now check the permissions of the file using mmgetacl newfile.txt command on the server. |

|||

==Configure your DNS Server== |

|||

You need to create a DNS alias that redirects requests to your samba cluster in a round robin fashion. So in our setup we need to create an alias the resolves to both 10.10.23.48 and 10.10.23.49 . |

|||

Configure your DNS Server |

|||

You need to create a dns alias that redirects requests to your samba cluster in a round robin fashion. So in our setup we need to create an alias the resolves to both 10.10.23.48 and 10.10.23.49 . |

|||

== Closing notes == |

|||

So there you have it. I hope this guide has been useful. Any constructive |

So there you have it. I hope this guide has been useful. Any constructive feedback is welcome to improve the guide, particularly from anybody who is running such a system in a production enviornment. |

||

For a complete open source solution you could replace GPFS for GlusterFS (which appears to be maturing nicely) or possibly OCFS2. Perhaps i will have time to test this some day but thats another days work. |

For a complete open source solution you could replace GPFS for GlusterFS (which appears to be maturing nicely) or possibly OCFS2. Perhaps i will have time to test this some day but thats another days work. |

||

Latest revision as of 06:25, 17 September 2018

Creating a Samba Cluster with CTDB and GPFS on CentOS

Want to build a scalable networked storage system that is always available? One that integrates with your Active Directory and supports the SMB2 protocol and ACLs? Then read on...

Warning: Out of date!!!

Please note that this guide is very old and doesn't contain the latest instructions for configuring CTDB and Samba. For the parts covering CTDB- and Samba-specific configuration, please follow the various sections under CTDB and Clustered Samba.

Assumed knowledge

This guide is written to take relatively inexperienced users through the setup of a fairly complex system step by step. However, for the sake of brevity I'm going to assume you are reasonably comfortable with GNU/Linux, can use Vim, Emacs or Nano, have a grasp of basic networking, and are not afraid of compiling some code :). Elements of this setup are somewhat interchangeable. You could probably replace CentOS with another distro, but you would then need to be familiar enough with that distro to adapt the commands and paths. Others may wish to replace GPFS with an alternative clustered file system or use a variation of my Samba configuration below. This setup has been tested in a non-production environment only. Deploying it in a production environment is at your own risk, and the author assumes no responsibility.

Preparing the Servers

This is a simple test setup. I’m going to use a couple of KVM VMs, but the same principles should apply on bare metal, and the approach should scale to many physical or virtual servers. The diagram below illustrates our setup.

Install CentOS 6

First we want to install a couple of servers. I have chosen CentOS 6 as it is binary compatible with RHEL 6, which is well supported by both GPFS and Samba. Create a couple of CentOS 6 VMs (I use virt-manager on CentOS 6, but you can use any tools you like). I use the ISO image CentOS-6.4-x86_64-minimal.iso. Each VM has 1 CPU, 1 GB of RAM and an 8 GB disk. Initially I allocate one NIC; we will add a second NIC for a private LAN later.

Select all the usual defaults. Select the option to configure networking manually, as we are going to configure it after the install, and make sure you install the SSH server. Once the servers are installed, set their IP addresses by editing the /etc/sysconfig/network-scripts/ifcfg-eth0 file and setting static addresses. These are the publicly accessible IP addresses of the servers. For reference, here is the ifcfg-eth0 file on the sambagpfs1 server:

DEVICE=eth0
HWADDR=52:54:00:D1:C5:25
TYPE=Ethernet
UUID=0b83419e-f28a-4a6d-84e5-64c813bf4f51
ONBOOT=yes
NM_CONTROLLED=yes
IPADDR="10.10.23.46"
NETMASK="255.255.255.0"
GATEWAY="10.10.23.253"
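If you would rather script this step on each server, the Bash function below is a minimal sketch that writes a static-IP ifcfg file with the same keys as the example above (HWADDR and UUID are left out). The name write_ifcfg and its argument order are hypothetical, not part of CentOS; the target directory is an argument so you can try it in a scratch directory before pointing it at /etc/sysconfig/network-scripts.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write a static-IP ifcfg file like the one above.
# Usage: write_ifcfg DIR DEVICE IPADDR NETMASK [GATEWAY]
write_ifcfg() {
    local dir=$1 dev=$2 ip=$3 mask=$4 gw=${5:-}
    local file="$dir/ifcfg-$dev"
    {
        echo "DEVICE=$dev"
        echo "TYPE=Ethernet"
        echo "ONBOOT=yes"
        echo "NM_CONTROLLED=yes"
        echo "IPADDR=\"$ip\""
        echo "NETMASK=\"$mask\""
        # Only write a gateway when one is given (the private LAN has none)
        if [ -n "$gw" ]; then
            echo "GATEWAY=\"$gw\""
        fi
    } > "$file"
}
```

For example, write_ifcfg /etc/sysconfig/network-scripts eth0 10.10.23.46 255.255.255.0 10.10.23.253, followed by service network restart. Leaving out the gateway argument gives a file suitable for a private interconnect interface.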

Also set a valid DNS server in /etc/resolv.conf. Once you're sure you have a working network connection, install the latest updates with the yum update command.

In virt-manager create a couple of 1GB IDE disks for our GPFS Cluster. When you try to add the disk to the 2nd server (sambagpfs2) virt-manager will give you a warning that “the disk is already in use by another guest” but this is OK. We are building a clustered file system where shared access to the underlying disks is necessary.

In a production scenario these disks would usually be shared LUNs on a SAN. When you reboot your servers, the dmesg command should report the additional disks with something like:

sd 0:0:0:0: [sda] 2048000 512-byte logical blocks: (1.04 GB/1000 MiB)
sd 0:0:1:0: [sdb] 2048000 512-byte logical blocks: (1.04 GB/1000 MiB)

Make sure you can see both disks from both servers (sambagpfs1 and sambagpfs2).

Disable SELINUX and iptables

There appears to be a communication problem between the GPFS daemons when SELinux is enabled. Edit the /etc/selinux/config file and set SELINUX=disabled. Also stop the iptables service and disable it at boot:

service iptables stop
chkconfig iptables off
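The SELinux edit can also be scripted. The sketch below (the function name disable_selinux_config is hypothetical) rewrites the SELINUX= line in place and leaves the SELINUXTYPE= line alone. The config file is only read at boot, so the change takes effect after a reboot; setenforce 0 switches a running system to permissive mode in the meantime.

```bash
#!/usr/bin/env bash
# Hypothetical helper: set SELINUX=disabled in an SELinux config file.
# Defaults to /etc/selinux/config; the setting is applied at the next boot.
disable_selinux_config() {
    local cfg=${1:-/etc/selinux/config}
    if grep -q '^SELINUX=' "$cfg"; then
        # Rewrite the existing SELINUX= line; SELINUXTYPE= does not match ^SELINUX=
        sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
    else
        # No SELINUX= line at all: add one
        echo 'SELINUX=disabled' >> "$cfg"
    fi
}
```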

It goes without saying that you need to consider these steps more carefully in a production environment.

Create an additional network card and set up passwordless login

Create an additional network card in virt-manager for each of our guests. These NICs carry the communication between the guests (GPFS and CTDB traffic), so we place them on the virtual network ‘default’ (NAT). Give the NICs sensible addresses, something like 192.168.1.x. Create a file /etc/sysconfig/network-scripts/ifcfg-eth1 for the new network interface. Sample config below:

DEVICE=eth1
TYPE=Ethernet
ONBOOT=yes
NM_CONTROLLED=yes
IPADDR="192.168.1.11"
NETMASK="255.255.255.0"

Generate an SSH key with the ssh-keygen -t rsa command on sambagpfs1 and copy it to the other server with the command ssh-copy-id -i /root/.ssh/id_rsa.pub 192.168.1.12.

Also, in the ~/.ssh folder, run cat id_rsa.pub >> authorized_keys. This is necessary because our GPFS setup uses ssh to execute commands locally as well as remotely; otherwise we will be prompted for a login on the local machine when executing GPFS commands.
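Because the local key has to end up in authorized_keys on every node, it is easy to append it twice when repeating these steps. A minimal sketch of an idempotent append follows; add_authorized_key is a hypothetical helper name, and it assumes the public key file holds a single line.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append a public key to authorized_keys only if it is
# not already there, so re-running the setup does not create duplicates.
# Usage: add_authorized_key PUBKEY_FILE [AUTH_KEYS_FILE]
add_authorized_key() {
    local pub=$1 auth=${2:-$HOME/.ssh/authorized_keys}
    local key
    key=$(cat "$pub")
    touch "$auth"
    # With StrictModes (the default), sshd ignores keys in a file others can write
    chmod 600 "$auth"
    # -x: whole-line match, -F: treat the key as a fixed string, not a regex
    if ! grep -qxF "$key" "$auth"; then
        printf '%s\n' "$key" >> "$auth"
    fi
}
```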

Now perform the inverse of the above on the second server, sambagpfs2. You should now be able to move between the two servers with the ssh <IP address> command. If not, use the -v switch of ssh to debug.

Hostnames in hosts file

Add your hostnames and internal IP addresses to the /etc/hosts file. My settings are shown below for reference:

192.168.1.11 sambagpfs1
192.168.1.12 sambagpfs2
10.10.23.48 smbgpfscluster
10.10.23.49 smbgpfscluster
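When adding nodes later, a small helper that only appends missing entries avoids duplicate lines in /etc/hosts. The sketch below is hypothetical (add_host_entries is not a system tool) and treats an entry as present when a line already maps the same IP address to the same name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: add "IP NAME" pairs to a hosts file, skipping any
# IP/name pair that is already present.
# Usage: add_host_entries HOSTS_FILE IP NAME [IP NAME ...]
add_host_entries() {
    local hosts=$1
    shift
    while [ $# -ge 2 ]; do
        local ip=$1 name=$2
        shift 2
        # awk exits 0 if some line has this IP as field 1 and the name later on
        if ! awk -v ip="$ip" -v name="$name" '
                $1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
                END { exit (found ? 0 : 1) }' "$hosts"; then
            printf '%s %s\n' "$ip" "$name" >> "$hosts"
        fi
    done
}
```

For example: add_host_entries /etc/hosts 192.168.1.11 sambagpfs1 192.168.1.12 sambagpfs2.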

Keep time synchronised

Install the ntp service :

yum install ntp

Set your local NTP server in /etc/ntp.conf, then set the service to start on boot:

chkconfig ntpd on

and start the service :

service ntpd start

Check the time is synchronised using the date command.

Now we are just about ready to begin installing GPFS :) .

Install and configure GPFS

Important! Ensure you have the appropriate licensing before installing GPFS. For more information see http://publib.boulder.ibm.com/infocenter/clresctr/vxrx/index.jsp?topic=%2Fcom.ibm.cluster.gpfs.doc%2Fgpfs_faqs%2Fgpfsclustersfaq.html

Copy the appropriate GPFS RPMs onto your servers. You must install the base GPFS RPMs first and then the patch RPMs.

Machine 1 - sambagpfs1

First of all we need to install some dependencies necessary to install GPFS and build the portability layer rpm :

yum install perl rsh ksh compat-libstdc++-33 make kernel-devel gcc gcc-c++ rpm-build

Now, install the GPFS base rpms :

rpm -ivh gpfs.*0-0*

then the patch rpm’s :

rpm -Uvh gpfs.*0-14*.rpm

Build the portability layer from the GPFS source directory:

cd /usr/lpp/mmfs/src

make LINUX_DISTRIBUTION=REDHAT_AS_LINUX Autoconfig

make World

make rpm (Note it’s not best practice to build rpm as root)

This builds an RPM for the portability layer. The benefit of building this RPM is that the other cluster members will not need as many prerequisites.

Install the portability RPM:

rpm -Uvh /root/rpmbuild/RPMS/x86_64/gpfs.gplbin-2.6.32-358.18.1.el6.x86_64-3.4.0-14.x86_64.rpm

In general it is bad practice to build as root; see http://serverfault.com/questions/10027/why-is-it-bad-to-build-rpms-as-root. As this is a non-production test system, I’m going to give myself a pass (for the moment).

Finally, copy the portability RPM to the other cluster servers, in this case sambagpfs2.

Machine 2 - sambagpfs2

Install some dependencies :

yum install perl rsh ksh compat-libstdc++-33

the base rpm’s :

rpm -ivh gpfs.*0-0*

the patch rpm’s :

rpm -Uvh gpfs.*0-14*.rpm

Install the portability RPM.

rpm -Uvh gpfs.gplbin-2.6.32-358.18.1.el6.x86_64-3.4.0-14.x86_64.rpm

Update your path to include the GPFS administration commands

Add the GPFS commands to your path (you don’t strictly need to do this, but it makes administering GPFS more convenient). Edit your .bash_profile and set the path to something like:

PATH="/usr/lpp/mmfs/bin:${PATH}:$HOME/bin"

and run source .bash_profile to update your path

Creating the GPFS Cluster

Create a file gpfsnodes.txt containing information about our GPFS cluster nodes

sambagpfs1:manager-quorum:
sambagpfs2:manager-quorum:

Create the test cluster using the mmcrcluster command.

mmcrcluster -N gpfsnodes.txt -p sambagpfs1 -s sambagpfs2 -r /usr/bin/ssh -R /usr/bin/scp -C SAMBAGPFS -A

This sets sambagpfs1 as the primary server and sambagpfs2 as the secondary server. -A specifies that the GPFS daemons start on boot.

Accept the license for your servers:

mmchlicense server --accept -N sambagpfs1

mmchlicense server --accept -N sambagpfs2

Use the mmlscluster command to verify that the cluster has been created correctly.

Now create NSDs for our two shared disks, /dev/sda and /dev/sdb.

Firstly create two files, nsd.txt :

sda:sambagpfs1,sambagpfs2::dataAndMetadata:1:test01:system

and nsd2.txt

sdb:sambagpfs2,sambagpfs1::dataAndMetadata:1:test02:system
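The two descriptor files differ only in the disk, the server order and the NSD name; listing the servers in reverse order makes each node the preferred NSD server for one disk. A hypothetical helper that writes such a line is sketched below. It follows the colon-separated descriptor layout used in this guide (GPFS 3.4); later GPFS releases introduced a stanza file format, so check the documentation for your version.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write a one-line GPFS 3.4-style NSD descriptor file,
# using the same field layout as nsd.txt and nsd2.txt above:
#   DiskName:ServerList::DiskUsage:FailureGroup:DesiredName:StoragePool
# Usage: write_nsd_desc FILE DISK SERVERS USAGE FAILGROUP NSDNAME POOL
write_nsd_desc() {
    local file=$1 disk=$2 servers=$3 usage=$4 fg=$5 name=$6 pool=$7
    # The third field is left empty, exactly as in the files above
    printf '%s:%s::%s:%s:%s:%s\n' \
        "$disk" "$servers" "$usage" "$fg" "$name" "$pool" > "$file"
}
```

For example, write_nsd_desc nsd2.txt sdb sambagpfs2,sambagpfs1 dataAndMetadata 1 test02 system reproduces nsd2.txt.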

Now issue the command :

mmcrnsd -F nsd.txt

and

mmcrnsd -F nsd2.txt

Use the mmlsnsd command to verify creation.

Start up the cluster :

mmstartup -a

Check the cluster is up with mmgetstate -a

Create the GPFS file system :

mmcrfs /dev/sambagpfs -F nsd.txt -A yes -B 256K -n 2 -M 2 -r1 -R2 -T /sambagpfs

Add the second disk to the filesystem :

mmadddisk sambagpfs -F nsd2.txt

Use the mmlsnsd command to verify that the two disks have been added to the cluster file system. You should see something similar to the following:

File system   Disk name    NSD servers
---------------------------------------------------------------------------
sambagpfs     test01       sambagpfs1,sambagpfs2
sambagpfs     test02       sambagpfs2,sambagpfs1

We only have two nodes in our GPFS cluster, so we need to set a tiebreaker disk so that the cluster can keep quorum if one node fails. Shut down GPFS to add the tiebreaker disk:

mmshutdown -a

mmchconfig tiebreakerdisks=test01

To ensure recent versions of Microsoft Excel work correctly over SMB2 we also need to set the following configuration in GPFS (Many thanks to Dan Cohen at IBM - XIV for this tip).

mmchconfig cifsBypassShareLocksOnRename=yes -i

Start up our GPFS Cluster again :

mmstartup -a

Mount the GPFS filesystem :

mmmount sambagpfs -a

At this point you should see the GPFS file system mounted at /sambagpfs on both servers. Congratulations! Take a break, stand up and walk around for a bit.

Install and Configure Samba and CTDB

I use the SerNet Samba 4 RPMs from http://enterprisesamba.com/. You have to register at the site (free) to download them. There appears to be a problem with Windows 7 (which uses SMB2 if the server supports it) on the latest release, 4.0.10, so I am currently using the 4.0.9 release. I have yet to test with 4.1. Once you register, you can find the 4.0.9 RPMs at https://download.sernet.de/packages/samba/old/4.0/rpm/4.0.9-5/centos/6/x86_64/

You need to download the following RPMs:

sernet-samba-libwbclient0-4.0.9-5.el6.x86_64
sernet-samba-libsmbclient0-4.0.9-5.el6.x86_64
sernet-samba-4.0.9-5.el6.x86_64
sernet-samba-common-4.0.9-5.el6.x86_64
sernet-samba-libs-4.0.9-5.el6.x86_64
sernet-samba-client-4.0.9-5.el6.x86_64
sernet-samba-winbind-4.0.9-5.el6.x86_64

First we need to install a couple of dependencies :

yum install redhat-lsb-core cups-libs

Then install the samba packages :

rpm -Uvh sernet-samba-*

We want CTDB to control Samba, so stop the Samba daemons from starting on boot on both servers:

chkconfig sernet-samba-nmbd off
chkconfig sernet-samba-smbd off
chkconfig sernet-samba-winbindd off

Install and configure CTDB

Download the latest CTDB sources (2.4 at the time of writing) onto the sambagpfs1 server from http://download.samba.org/pub/ctdb/ctdb-2.4.tar.gz. Extract the tarball onto the GPFS file system (/sambagpfs) and, on the sambagpfs1 server, run:

yum install autoconf

./autogen.sh

./configure

make

make install

Now run make install on sambagpfs2 as well; because the source tree is on the shared GPFS file system, it is already visible there.

Create /etc/sysconfig/ctdb with the following contents

CTDB_RECOVERY_LOCK=/sambagpfs/recovery.lck
CTDB_PUBLIC_INTERFACE=eth0
CTDB_PUBLIC_ADDRESSES=/usr/local/etc/ctdb/public_addresses
CTDB_MANAGES_SAMBA=yes
CTDB_MANAGES_WINBIND=yes
CTDB_NODES=/usr/local/etc/ctdb/nodes
CTDB_SERVICE_WINBIND=sernet-samba-winbindd
CTDB_SERVICE_SMB=sernet-samba-smbd
CTDB_SERVICE_NMB=sernet-samba-nmbd

Next create the directory /usr/local/var

mkdir /usr/local/var

Then change to the /usr/local/etc/ctdb/ directory and create the following two files:

nodes

192.168.1.11
192.168.1.12

public_addresses

10.10.23.48/24 eth0
10.10.23.49/24 eth0

Also create the shared recovery lock file:

touch /sambagpfs/recovery.lck
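Before starting ctdbd it is worth confirming that every file the sysconfig file points at actually exists, since a missing nodes or public_addresses file is easy to overlook. The check below is a hypothetical sketch: it sources /etc/sysconfig/ctdb (plain VAR=value lines) in a subshell and reports any of CTDB_RECOVERY_LOCK, CTDB_NODES and CTDB_PUBLIC_ADDRESSES that is unset or points to a missing file.

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight check for the CTDB sysconfig file.
# Usage: check_ctdb_paths [SYSCONFIG_FILE]   (non-zero exit if anything is missing)
check_ctdb_paths() {
    local cfg=${1:-/etc/sysconfig/ctdb}
    (
        # Sourced in a subshell so the variables do not leak into the caller
        . "$cfg" || exit 1
        missing=0
        for var in CTDB_RECOVERY_LOCK CTDB_NODES CTDB_PUBLIC_ADDRESSES; do
            path=${!var}   # bash indirect expansion: value of the variable named $var
            if [ -z "$path" ]; then
                echo "$var is not set" >&2
                missing=1
            elif [ ! -e "$path" ]; then
                echo "$var points to a missing file: $path" >&2
                missing=1
            fi
        done
        exit "$missing"
    )
}
```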

Configuring Samba

Edit the /etc/default/sernet-samba file and set the following parameter :

SAMBA_START_MODE="classic"

Now create smb.conf in /etc/samba. The contents of my smb.conf file are reproduced below for reference:

#===== Global Settings ============
[global]
netbios name = smbgpfscluster
server string = Samba Version %v on %h
workgroup = HOHO
security = ADS
realm = HOHO.BALE.COM
# These were useful for debugging my initial setup but are probably too verbose for general use
log level = 3 passdb:3 auth:3 winbind:10 idmap:10
idmap config *:backend = tdb2
idmap config *:range = 1000-90000
winbind use default domain = yes
# Set these to no as it doesn't work well when you have thousands of users in your domain
winbind enum users = no
winbind enum groups = no
winbind cache time = 900
winbind normalize names = no
clustering = yes
unix extensions = no
mangled names = no
ea support = yes
store dos attributes = yes
map readonly = no
map archive = no
map system = no
force unknown acl user = yes
# Stuff necessary for guest logins to work where required
guest account = nobody
map to guest = bad user

#============ Share Definitions ============
[gpfstest]
comment = GPFS Cluster on %h using %R protocol
path = /sambagpfs
writeable = yes
create mask = 0770
force create mode = 0770
locking = yes
vfs objects = gpfs fileid
# vfs_gpfs settings
gpfs:sharemodes = yes
gpfs:winattr = yes
nfs4:mode = special
nfs4:chown = yes
nfs4:acedup = merge
# some vfs related to clustering
fileid:algorithm = fsname

Notes on Samba config

This is a configuration for an AD domain member server. I did not have the necessary privileges on our AD to install the RFC2307/SFU schema extensions. If you have such access, that would be a better way to proceed, as you would get consistent UID/GID allocation between clusters.

Edit /etc/nsswitch.conf

passwd: files winbind
shadow: files
group: files winbind
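On more than a couple of servers you may prefer to script the nsswitch.conf change. The sketch below (enable_winbind_nss is a hypothetical name) appends winbind to the existing passwd and group lines if it is not already listed, and leaves shadow unchanged as in the settings above. It assumes those lines already exist and carry no trailing comments.

```bash
#!/usr/bin/env bash
# Hypothetical helper: make sure the passwd and group lines of an
# nsswitch.conf file list winbind (shadow is left alone).
# Usage: enable_winbind_nss [NSSWITCH_FILE]
enable_winbind_nss() {
    local nss=${1:-/etc/nsswitch.conf}
    local db
    for db in passwd group; do
        # Skip databases that already list winbind as a whole word
        if grep -Eq "^${db}:.*[[:space:]]winbind([[:space:]]|$)" "$nss"; then
            continue
        fi
        # Append " winbind" after the last non-blank character of the line
        sed -i -E "s/^(${db}:.*[^[:space:]])[[:space:]]*$/\1 winbind/" "$nss"
    done
}
```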

In the /etc/krb5.conf file, set your default realm (in the [libdefaults] section):

default_realm = HOHO.BALE.COM

Now start the CTDB daemon on both servers.

ctdbd --syslog --debug=3

Then check the state of the cluster:

ctdb status
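To check node health from a script rather than by eye, the sketch below parses ctdb status output. It assumes node lines of the form pnn:0 192.168.1.11 OK (THIS NODE), with the status in the third field; this is based on CTDB 2.x output and should be checked against your version. ctdb_all_ok and wait_for_ctdb are hypothetical helper names, and the retry count and 10-second interval are arbitrary. At this point in the guide expect the check to fail, since the nodes stay unhealthy until the domain join below.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `ctdb status` output on stdin and succeed only if
# at least one node line is present and every node line reports OK.
# Assumed node line format:  pnn:0 192.168.1.11     OK (THIS NODE)
ctdb_all_ok() {
    awk '
        /^pnn:/ {
            nodes++
            if ($3 != "OK") { bad++; print "not OK: " $0 > "/dev/stderr" }
        }
        END { exit (nodes == 0 || bad > 0) }'
}

# Hypothetical wait loop: poll until all nodes are OK or give up after N tries.
wait_for_ctdb() {
    local tries=${1:-30}
    while [ "$tries" -gt 0 ]; do
        if ctdb status 2>/dev/null | ctdb_all_ok 2>/dev/null; then
            return 0
        fi
        tries=$((tries - 1))
        sleep 10
    done
    return 1
}
```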

At this stage the nodes will report as unhealthy, because winbind cannot start until the servers have joined the domain. So let's join the domain:

net ads join -U <some account with the necessary privileges> -d5

If all goes well, you have now joined the domain; if not, the debug output (-d5) will help you find the problem.

Winbind did not start successfully when we first started CTDB, so start it manually now:

service sernet-samba-winbindd start

Check that the winbind daemon has started:

wbinfo -p

and verify that you have successfully joined the domain:

wbinfo -t

and that you can authenticate a user against the domain:

wbinfo -a <avaliddomainusername>

In addition the following command should return valid user information.

id <avaliddomainusername>
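The non-interactive checks above can be bundled into one script that stops at the first failure, which is handy when repeating them on each node. This is a hypothetical sketch (run_check and check_winbind are illustrative names); wbinfo -a is left out because it prompts for a password.

```bash
#!/usr/bin/env bash
# Run one check quietly and print PASS/FAIL together with the command line.
run_check() {
    if "$@" > /dev/null 2>&1; then
        echo "PASS: $*"
    else
        echo "FAIL: $*" >&2
        return 1
    fi
}

# Hypothetical helper: winbind ping, trust secret check, then user lookup.
# Usage: check_winbind DOMAIN_USER
check_winbind() {
    local user=$1
    run_check wbinfo -p &&
        run_check wbinfo -t &&
        run_check id "$user"
}
```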

The wbinfo -u command should list the users in your domain. Our domain has tens of thousands of users, so this may take some time; you may even have to run wbinfo a couple of times to get valid results.

The ctdb ip command should report the current assignment of our cluster's public IP addresses.
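Similarly, ctdb ip output can be checked for public addresses that no node is hosting. The sketch below assumes lines of the form ADDRESS PNN (for example 10.10.23.48 0) with a PNN of -1 for an unassigned address; that layout is an assumption based on CTDB 2.x and worth verifying on your system. ctdb_ips_assigned is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `ctdb ip` output on stdin, print where each public
# address lives, and fail if there are none or any is unassigned (PNN -1).
# Assumed line format:  10.10.23.48 0
ctdb_ips_assigned() {
    awk '
        $1 ~ /^[0-9]+\.[0-9]+\.[0-9]+\.[0-9]+$/ {
            ips++
            if ($2 == -1) { unassigned++; print "unassigned: " $1 > "/dev/stderr" }
            else print $1 " -> node " $2
        }
        END { exit (ips == 0 || unassigned > 0) }'
}
```

For example: ctdb ip | ctdb_ips_assigned.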

Now try to access the shares from Windows. For some reason I could not fathom, I was getting "access denied" when I tried to access the shares at this point. Rebooting both servers appeared to resolve the issue :).

GPFS ACLs

The only GPFS ACL style that has really been tested is NFSv4 (-k nfsv4, which can be set with mmcrfs or mmchfs).

Configure your DNS Server

You need to create a DNS name that distributes requests to your Samba cluster in a round-robin fashion. In our setup, that means a name that resolves to both 10.10.23.48 and 10.10.23.49.
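Once the DNS records are in place, you can check from a client that the name returns both addresses. The sketch below (dns_has_all_ips is a hypothetical helper) reads resolver output on stdin, takes the first field of each line as an address, and fails if any expected address is missing. Note that on the cluster servers themselves getent normally consults /etc/hosts first, which already lists smbgpfscluster, so dig is the better test of the DNS server.

```bash
#!/usr/bin/env bash
# Hypothetical check: confirm a name resolves to every expected cluster address.
# Reads resolver output on stdin (first field of each line = address).
# Usage: dig +short +search smbgpfscluster | dns_has_all_ips 10.10.23.48 10.10.23.49
dns_has_all_ips() {
    local resolved ip rc=0
    resolved=$(awk '{ print $1 }' | sort -u)
    for ip in "$@"; do
        # Whole-line, fixed-string match against the resolved addresses
        if ! grep -qxF "$ip" <<< "$resolved"; then
            echo "missing: $ip" >&2
            rc=1
        fi
    done
    return "$rc"
}
```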

Closing notes

So there you have it. I hope this guide has been useful. Any constructive feedback to improve the guide is welcome, particularly from anybody who is running such a system in a production environment.

For a completely open source solution you could replace GPFS with GlusterFS (which appears to be maturing nicely) or possibly OCFS2. Perhaps I will have time to test this some day, but that's another day's work.