Samba & Clustering

A while ago I mentioned that a number of us have been working on a new plan for clustered Samba. The document below is my write up of our current thoughts on how to make clustered Samba scalable, fast and robust.

This is the first public discussion of this plan, and I hope we'll get some useful feedback. It is quite complex in places, and I expect that it will need quite a few details filled in and quite a few things fixed as problems are found, but I also think it does provide a good basis for a great clustered Samba solution.

Cheers, Tridge

NOTE: A number of people are now working on an implementation of this proposal. See CTDB Project for details.

ctdb - Clustered trivial database

Andrew Tridgell Samba Team

version 0.3 November 2006

tdb has been at the heart of Samba for many years. It is an extremely lightweight database originally modelled on the old-style Berkeley database API, but with some additional features that make it particularly suitable for Samba.

Over the last few years there has been a lot of discussion about the possibility of using Samba on tightly connected clusters of computers running a clustered filesystem, and inevitably those discussions revolve around ways of providing the equivalent functionality to tdb but for a clustered environment.

It was quickly discovered that a naive port of tdb to a cluster performed very badly, often resulting in negative scaling, sometimes by quite a large factor. These original porting attempts were based around using the coherence provided by the clustered filesystem through the fcntl() byte range locking interface as the core coherence method for a clustered tdb, and using the shared storage of files available in all clustered filesystems to store the tdb database itself.

My own efforts in this area largely consisted of talking to the developers of a number of clustered filesystems, and giving them simple test tools which demonstrated the lack of scalability of the operations we were relying upon. While this did lead to some improvements in some of the clustered filesystems, the performance was still a very long way from what we needed in a really scalable clustered database solution.

vl-messaging

The first real step forward was the work done by Volker Lendecke and others in the 'vl-messaging' branch of Samba3. That code attempts to work around the performance limitations of clustered filesystems using a variety of techniques. The most significant of those techniques are:

1) changing the tdb API to use a fetch_locked() call paired with a talloc destructor for unlock, to reduce the number of round trips needed for the most common operation on the most contended databases.

2) The use of a 'dispatcher' process, separate from smbd, to handle communication between the database layers of the smbd daemons on each node.

3) The use of a 'file per key' design to minimise the contention on data within files, which especially helps on clustered filesystems which have the notion of a 'whole file lease' or token.

After some tuning the vl-messaging branch did show some degree of positive scaling, which was an enormous step forward compared to previous attempts. Unfortunately its baseline performance is still not nearly at the level we want.

Mainz plan

At a meeting in Mainz in July we did some experiments on the vl-messaging code, and came up with a new design that should alleviate to a large extent the remaining scalability and baseline performance problems.

The basis of the design is a significant departure from our previous attempts, in that we will no longer attempt to utilise the existing data and lock coherence mechanisms of the underlying clustered filesystem, but will instead build our own locking and transport layer independent of the filesystem.

Temporary databases

The design below is particularly aimed at the temporary databases in Samba, which are the databases that get wiped and re-created each time Samba is started. The most important of those databases are the 'brlock.tdb' byte range locking database and the 'locking.tdb' open file database. There are a number of other databases that fall into this class, such as 'connections.tdb' and 'sessionid.tdb', but they are of less concern as they are accessed much less frequently.

Samba also uses a number of persistent databases, such as the password database, which must be handled in a different manner from the temporary databases. The modification to the 'Mainz design' for those databases is discussed in a later section below.

What data can we lose?

One of the keys to understanding the new design that is outlined below is to see that Samba has quite different requirements regarding data integrity for the data in its temporary databases than most traditional clustered databases provide.

In a traditional clustered database or clustered filesystem with N nodes, the system tries to guarantee that even if N-1 of those nodes suddenly go down, no data is lost. Guaranteeing this data integrity is very expensive, and is a large part of why it is hard to make clustered databases and clustered filesystems scale and perform well.

So when we were trying to build Samba on top of a clustered filesystem we were automatically getting this data integrity guarantee. This usually meant that when we wrote data to the filesystem that the data had to go onto shared storage (a SAN or similar), or had to be replicated to all nodes. For user data written by Samba clients this is great, but for the meta data in our temporary databases it is completely unnecessary.

The reason it is unnecessary is twofold:

1) when a node goes down that holds the only copy of some meta data, it's OK if that meta data only relates to open files or other resources opened by clients of that node. In fact, it's preferable to lose that data, as when that node goes down those resources are implicitly released.

2) data associated with open resources on node A that is stored on node B can be recreated by node A, as node A already keeps internal data structures relating to the open resources of its clients.

Combining these two observations, we can see that we don't need, or in fact want, a clustered version of tdb in Samba for the temporary databases to provide the usual guarantees of data integrity.

In the extreme case where all nodes go down, the correct thing to happen to these temporary databases is that they be completely lost. A normal clustered database or clustered filesystem takes great steps to ensure that doesn't happen.

Where this really comes into play is in the description of the "ctdb recovery" below which handles the situation when one or more nodes go down.

Remote Operations

Another crucial thing to understand about our temporary databases is that the operations we need to perform are simple functions on the data in an individual record. For example, a very common operation would be a "conditional append", where data is appended to the existing record for a given key depending on the data meeting some function condition.

The 'function' varies between the different databases. In the case of the open file database, it involves checking that a new open doesn't conflict with an existing open.

Using the current Samba approach, this function would be run inside the smbd daemon where the file open request was received from the client. When we apply this to a clustered Samba server, that would imply that this conditional function would be run on a node that may not 'own' the data for the record. That would imply that the caller would have to obtain a remote lock on the record, then run the function, then update the record and release the lock.

This remote locking pattern would likely form one of the main bottlenecks in a clustered Samba solution. To avoid this bottleneck we will take a different approach in CTDB where we allow the function to be run remotely, on the node that holds the record's data.

This makes CTDB much like a record-oriented RPC system.

Local storage

The first step in the design is to abandon the use of the underlying clustered filesystem for storing the tdb data in the temporary databases. Instead, each node of the cluster will have a local, old-style tdb stored in a fast local filesystem. Ideally this filesystem will be in-memory, such as on a small ramdisk, but a fast local disk will also suffice if that is more administratively convenient.

This local storage tdb will be referred to as the LTDB in the discussion below. The contents of this database on each node will be a subset of the records in the CTDB (clustered tdb).

Virtual Node Mapping

To facilitate automatic recovery when one or more nodes in the cluster die, the CTDB will make use of a node numbering scheme which maps a 'virtual node number' (VNN) onto a physical node address in the cluster. This mapping will be stored in a file in a common location in the clustered filesystem, but will not rely on fast coherence in the filesystem. The table will be read only at startup, and when a transmission error or error return from the CTDB protocol indicates that the local copy of the node mapping may be out of date. The contents and format of this virtual node mapping table will be specific to the type of underlying clustering protocol in use by CTDB.

Extended Data Records

The records in the LTDB will be keyed in the same way they are for a normal tdb in Samba, but the data portion of each record will be augmented with an additional header. That header will contain the following additional information:

    uint64 RSN        (record sequence number)
    uint32 DMASTER    (VNN of data master)
    uint32 LACCESSOR  (VNN of last accessor)
    uint32 LACOUNT    (last accessor count)

This augmented data will not be visible to the users of the CTDB API, and will be stripped before the data is returned.

The purpose of the RSN (record sequence number) is to identify which of the nodes in the cluster has the most recent copy of a particular record in the database during a recovery after one or more nodes have died. It is incremented whenever a record is updated in an LTDB by the 'DMASTER' node. See the section on "ctdb recovery" for more information on the RSN.

The DMASTER ('data master') field is the virtual node number of the node that 'owns the data' for a particular record. It is only authoritative on the node which has the highest RSN for a particular record. On other nodes it can be considered a hint only.

One of the design constraints of CTDB is that the node that has the highest RSN for a particular record will also have its VNN equal to the local DMASTER field of the record, and that no other node will have its VNN equal to the DMASTER field. This allows a node to verify that it is the 'owner' of a particular record by comparing its local copy of the DMASTER field with its VNN. If and only if they are equal then it knows that it is the current owner of that record.
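The header and the ownership test described above could be sketched in C roughly as follows. This is only an illustration: the struct name `ltdb_header`, the helper names and the choice of storing the header in native layout at the front of the LTDB value are my assumptions, not part of the design.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical in-memory form of the extra per-record header.
 * Field names follow the text; the struct itself is an assumption. */
struct ltdb_header {
    uint64_t rsn;       /* record sequence number */
    uint32_t dmaster;   /* VNN of data master */
    uint32_t laccessor; /* VNN of last accessor */
    uint32_t lacount;   /* last accessor count */
};

/* A node owns a record if and only if its VNN equals the local
 * DMASTER field of that record. */
static int ltdb_is_dmaster(const struct ltdb_header *h, uint32_t my_vnn)
{
    return h->dmaster == my_vnn;
}

/* Split a raw LTDB value into header and user-visible data, so the
 * header can be stripped before data is returned to the caller.
 * Returns 0 on success, -1 if the value is too short. */
static int ltdb_split(const uint8_t *raw, size_t raw_len,
                      struct ltdb_header *h,
                      const uint8_t **data, size_t *data_len)
{
    assert(raw != NULL && h != NULL && data != NULL && data_len != NULL);
    if (raw_len < sizeof(*h)) {
        return -1;
    }
    memcpy(h, raw, sizeof(*h));
    *data = raw + sizeof(*h);
    *data_len = raw_len - sizeof(*h);
    return 0;
}
```

Because the LTDB is local to one node, a native struct layout is enough for this sketch; nothing here is meant to describe a wire format.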

The LACCESSOR and LACOUNT fields form the basis for a heuristic mechanism to determine if the current data master should hand over ownership of this record to another node. The LACCESSOR field holds the VNN of the last node to request a copy of the record, and the LACOUNT field holds a count of the number of consecutive requests by that node. When LACOUNT goes above a configurable threshold then a record transfer will be used in response to a record request (see the sections on record request and record transfer below).

It is worth noting that this heuristic mechanism is very primitive, and suffers from the problem that frequent remote reads of records will require that the data master write frequently to update these fields in the LTDB. The use of a ramdisk for the LTDB will reduce the impact of these writes, but it still is likely that this heuristic mechanism will need to be improved upon in future revisions of this design.
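A minimal sketch of the LACCESSOR/LACOUNT bookkeeping, assuming the counter restarts at 1 whenever a different node makes a request. The names `accessor_state` and `note_access` are made up for this example, and the threshold is just a parameter here.

```c
#include <assert.h>
#include <stdint.h>

/* The two heuristic fields from the record header. */
struct accessor_state {
    uint32_t laccessor; /* VNN of the last node to request the record */
    uint32_t lacount;   /* consecutive requests made by that node */
};

/* Record a request from 'requester'. Returns 1 when the count has gone
 * above 'threshold', meaning the data master should hand the record
 * over, and 0 otherwise. */
static int note_access(struct accessor_state *s, uint32_t requester,
                       uint32_t threshold)
{
    if (s->lacount > 0 && s->laccessor == requester) {
        s->lacount++;
    } else {
        /* a different node broke the run, so start counting again */
        s->laccessor = requester;
        s->lacount = 1;
    }
    return s->lacount > threshold;
}
```

Note that every call writes to the state, which is exactly the cost the paragraph above worries about for frequently read records.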

Location Master

In addition to the concept of a DMASTER (data master), each record will have an associated LMASTER (location master). This is the VNN of the node for each record that will be referred to when a node wishes to contact the current DMASTER for a record. The LMASTER for a particular record is determined solely by the number of virtual nodes in the cluster and the key for the record.
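One way to derive the LMASTER from nothing but the key and the number of virtual nodes is to hash the key and reduce it modulo the node count. The sketch below does that with a 32-bit FNV-1a hash. The choice of hash and the function names are assumptions of mine, and the result is a slot index into the virtual node table rather than a VNN value as such.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* 32-bit FNV-1a hash, used here only as a stand-in key hash. */
static uint32_t key_hash(const uint8_t *key, size_t len)
{
    uint32_t h = 2166136261u;
    for (size_t i = 0; i < len; i++) {
        h ^= key[i];
        h *= 16777619u;
    }
    return h;
}

/* Slot of the location master for a key, in the range 0..num_vnn-1.
 * Depends only on the key and the number of virtual nodes. */
static uint32_t lmaster_slot(const uint8_t *key, size_t len, uint32_t num_vnn)
{
    assert(num_vnn > 0);
    return key_hash(key, len) % num_vnn;
}
```

Any node can compute this locally, so finding the LMASTER never needs a network round trip.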

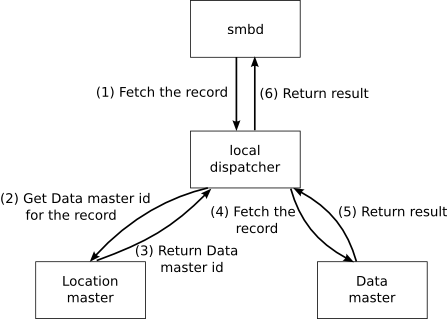

Finding the DMASTER

When a node in the cluster wants to find out who the DMASTER is for a record, it first contacts the LMASTER, which will reply with the VNN of the DMASTER. The requesting node then contacts that DMASTER, but must be prepared to receive a further redirect, because the value for the DMASTER held by the LMASTER could have changed by the time the node sends its message.

This step of returning a DMASTER reply from the LMASTER is skipped when the LMASTER also happens to be the DMASTER for a record. In that case the LMASTER can send a reply to the requester's query directly, skipping the redirect stage.

It should also be noted that nodes should have a small cache of DMASTER location replies, and can use this cache to avoid asking the LMASTER every time for the location of a particular record. In that case, just as in the case when they get a reply from the LMASTER, they must be prepared for a further redirect, or errors. If they get an error reply, then depending on the type of error reply they will either go back to the LMASTER or will initiate a recovery process (see the section on error handling below).
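The lookup described above (try a cached location first, fall back to the LMASTER, and follow redirects until the owner is found) can be modelled with a toy, single-process simulation. Everything in it is hypothetical: the `toy_cluster` struct stands in for the per-node DMASTER fields of one record, and the hop limit is an arbitrary safety bound of mine.

```c
#include <assert.h>
#include <stdint.h>

#define MAX_NODES 8
#define MAX_HOPS  4

/* Toy model of one record: dmaster_hint[n] is node n's local DMASTER
 * field. The real owner is the node whose hint equals its own VNN. */
struct toy_cluster {
    uint32_t num_nodes;
    uint32_t dmaster_hint[MAX_NODES];
};

/* Contact 'start' and follow redirects. Returns the VNN of the DMASTER,
 * or -1 if it was not reached within the hop limit. */
static int follow_redirects(const struct toy_cluster *c, uint32_t start)
{
    uint32_t node = start;
    for (int hop = 0; hop <= MAX_HOPS; hop++) {
        if (node >= c->num_nodes) {
            return -1;                /* stale or invalid VNN */
        }
        if (c->dmaster_hint[node] == node) {
            return (int)node;         /* this node owns the record */
        }
        node = c->dmaster_hint[node]; /* redirected to the next hint */
    }
    return -1;
}

/* Try the cached location first (cached_vnn < 0 means no cache entry),
 * then fall back to asking the LMASTER. */
static int lookup_dmaster(const struct toy_cluster *c, int cached_vnn,
                          uint32_t lmaster)
{
    if (cached_vnn >= 0) {
        int found = follow_redirects(c, (uint32_t)cached_vnn);
        if (found >= 0) {
            return found;
        }
    }
    return follow_redirects(c, lmaster);
}
```

A return of -1 from `lookup_dmaster` corresponds to the case where the caller would have to treat the failure as an error and possibly start recovery.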

Common fetching sequence:

(image source -- http://samba.org/~ab/dralex/sequence.svg)

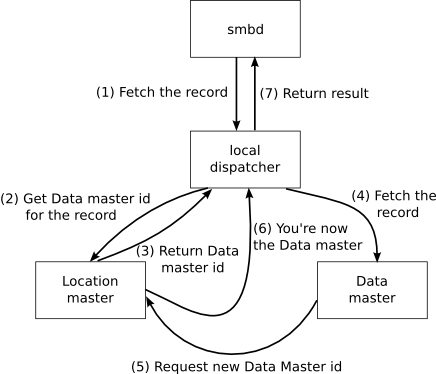

Fetching sequence with data master transition:

Clustered TDB API

The CTDB API is a small extension to the existing tdb API, and draws on ideas from the vl-messaging code.

The main calls will be:

struct ctdb_call {
    TDB_DATA key;          /* record key */
    TDB_DATA record_data;  /* current data in the record */
    TDB_DATA *new_data;    /* optionally updated record data */
    TDB_DATA *call_data;   /* optionally passed from caller */
    TDB_DATA *reply_data;  /* optionally returned by function */
};

/* initialise a ctdb context */
struct ctdb_context *ctdb_init(TALLOC_CTX *mem_ctx);

/* a ctdb call function */
typedef int (*ctdb_fn_t)(struct ctdb_call *);

/* setup a ctdb call function */
int ctdb_set_call(struct ctdb_context *ctdb, ctdb_fn_t fn, int id);

/* attach to a ctdb database */
int ctdb_attach(struct ctdb_context *ctdb, const char *name, int tdb_flags,
                int open_flags, mode_t mode);

/* make a ctdb call. The associated ctdb call function will be called
   on the DMASTER for the given record */
int ctdb_call(struct ctdb_context *ctdb, TDB_DATA key, int call_id,
              TDB_DATA *call_data, TDB_DATA *reply_data);

NOTE: above API still needs a lot more development

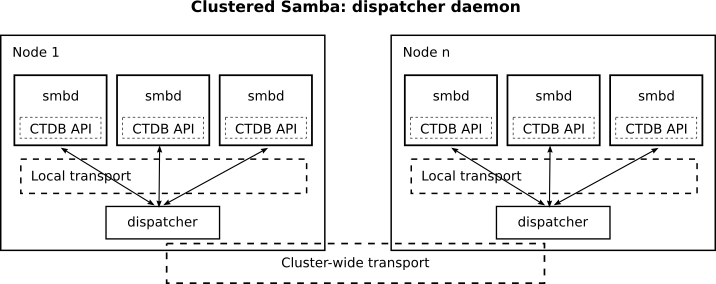

Dispatcher Daemon

Coordination between the nodes in the cluster will happen via a 'dispatcher daemon'. This daemon will listen for CTDB protocol requests from other nodes, and from the local smbd via a unix domain datagram socket.

The nature of the protocol requests described below means that the dispatcher daemon will need to be written in an event driven manner, with all operations happening asynchronously.

Dispatcher daemon in clustered system:

(image source -- http://samba.org/~ab/dralex/clustered_samba_dispatcher.svg)

LTDB Bypass

Experiments show that sending a request via the dispatcher daemon will add about 4us on an otherwise idle multi-processor system (as measured on a Linux 2.6 kernel with dual Xeon processors). On a busy system or on a single core system this time could rise considerably, making it preferable for the dispatcher daemon to be avoided in common cases.

This will be achieved by allowing smbd to directly attach to the LTDB, and to perform calls directly on the LTDB once it determines (with the record lock held) that the local node is in fact the DMASTER for the record.

This means that if a record is frequently accessed by one node then it will migrate via the DMASTER heuristics to the requesting node, and from then on the smbd processes on that node will have direct local access to the record.

CTDB Protocol

The CTDB protocol consists of the following message types:

    CTDB_REQ_CALL
    CTDB_REPLY_CALL
    CTDB_REPLY_REDIRECT
    CTDB_REQ_DMASTER
    CTDB_REPLY_DMASTER
    CTDB_REPLY_ERROR

Additional message types will be used during node recovery after one or more nodes have crashed. They will be dealt with separately below.

CTDB protocol header

Every CTDB_REQ_* packet contains the following header:

    uint32 OPERATION   (CTDB opcode)
    uint32 DESTNODE    (destination VNN)
    uint32 SRCNODE     (source VNN)
    uint32 REQID       (request id)
    uint32 REQTIMEOUT  (request timeout, milliseconds)

Every CTDB_REPLY_* packet contains the following header:

    uint32 OPERATION   (CTDB opcode)
    uint32 DESTNODE    (destination VNN)
    uint32 SRCNODE     (source VNN)
    uint32 REQID       (request id)

The DESTNODE and SRCNODE are used to determine if the virtual node mapping table has been updated. If a node receives a message which is not for one of its own VNN numbers then it will send back a reply with a CTDB_ERR_VNN_MAP status.

The REQID is used to allow a node to have multiple outstanding requests on an unordered transport. A reply will always contain the same REQID as the corresponding request. It is up to each node to keep track of what REQID values are pending.

The REQTIMEOUT field is used to determine how long the receiving node should keep trying to complete the request if the record is currently locked by another client. A value of zero means to fail immediately if the record is locked.
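As an illustration of the request header, here is a sketch that encodes and decodes the five fields and performs the DESTNODE check. The document does not specify a byte order, so big-endian is purely an assumption here, as are the function names and return conventions.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define CTDB_REQ_HEADER_LEN 20 /* five uint32 fields */

struct ctdb_req_header {
    uint32_t operation;  /* CTDB opcode */
    uint32_t destnode;   /* destination VNN */
    uint32_t srcnode;    /* source VNN */
    uint32_t reqid;      /* request id */
    uint32_t reqtimeout; /* request timeout, milliseconds */
};

/* Big-endian helpers (the byte order is an assumption). */
static void put_u32(uint8_t *p, uint32_t v)
{
    p[0] = (uint8_t)(v >> 24);
    p[1] = (uint8_t)(v >> 16);
    p[2] = (uint8_t)(v >> 8);
    p[3] = (uint8_t)v;
}

static uint32_t get_u32(const uint8_t *p)
{
    return ((uint32_t)p[0] << 24) | ((uint32_t)p[1] << 16) |
           ((uint32_t)p[2] << 8) | (uint32_t)p[3];
}

/* Returns the number of bytes written, or 0 if the buffer is too small. */
static size_t encode_req_header(const struct ctdb_req_header *h,
                                uint8_t *buf, size_t len)
{
    if (len < CTDB_REQ_HEADER_LEN) {
        return 0;
    }
    put_u32(buf, h->operation);
    put_u32(buf + 4, h->destnode);
    put_u32(buf + 8, h->srcnode);
    put_u32(buf + 12, h->reqid);
    put_u32(buf + 16, h->reqtimeout);
    return CTDB_REQ_HEADER_LEN;
}

/* Returns 0 if the header is for this node, 1 if DESTNODE does not
 * match my_vnn (the caller would answer with CTDB_ERR_VNN_MAP), and
 * -1 if the packet is too short to contain a header. */
static int decode_req_header(const uint8_t *buf, size_t len,
                             uint32_t my_vnn, struct ctdb_req_header *h)
{
    if (len < CTDB_REQ_HEADER_LEN) {
        return -1;
    }
    h->operation = get_u32(buf);
    h->destnode = get_u32(buf + 4);
    h->srcnode = get_u32(buf + 8);
    h->reqid = get_u32(buf + 12);
    h->reqtimeout = get_u32(buf + 16);
    return h->destnode == my_vnn ? 0 : 1;
}
```

The sketch only checks a single local VNN; a node answering for several VNNs would compare DESTNODE against each of them.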

CTDB_REQ_CALL

    uint32 CALLID
    uint32 KEYLEN
    uint32 CALLDATALEN
    uint8  KEY[]
    uint8  CALLDATA[]

A CTDB_REQ_CALL request is the generic remote call function in CTDB. It is used to run a remote function (identified by CALLID) on a record in the database.

The KEYLEN/KEY parameters specify the record to be operated on. The CALLDATALEN/CALLDATA parameters are optional additional data passed to the remote function.
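Since KEY and CALLDATA are variable length, a receiver has to validate both lengths against the packet size before touching the data. A sketch of that parsing step follows, covering only the CTDB_REQ_CALL body after the common header. As before, the big-endian encoding and all of the names are assumptions made for the example.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Parsed view of a CTDB_REQ_CALL body. The pointers refer into the
 * caller's buffer; nothing is copied. */
struct ctdb_req_call_view {
    uint32_t callid;
    uint32_t keylen;
    uint32_t calldatalen;
    const uint8_t *key;
    const uint8_t *calldata;
};

static uint32_t get_u32(const uint8_t *p)
{
    return ((uint32_t)p[0] << 24) | ((uint32_t)p[1] << 16) |
           ((uint32_t)p[2] << 8) | (uint32_t)p[3];
}

/* Returns 0 on success, -1 if the buffer is too short for the fixed
 * fields or for the lengths that those fields announce. */
static int parse_req_call(const uint8_t *buf, size_t len,
                          struct ctdb_req_call_view *out)
{
    const size_t fixed = 12; /* CALLID + KEYLEN + CALLDATALEN */
    size_t rest;

    if (len < fixed) {
        return -1;
    }
    out->callid = get_u32(buf);
    out->keylen = get_u32(buf + 4);
    out->calldatalen = get_u32(buf + 8);

    rest = len - fixed;
    if (out->keylen > rest) {
        return -1;
    }
    /* compare against the remainder to avoid overflow in the sum */
    if (out->calldatalen > rest - out->keylen) {
        return -1;
    }
    out->key = buf + fixed;
    out->calldata = out->key + out->keylen;
    return 0;
}
```

A CALLDATALEN of zero simply leaves `calldata` pointing at the end of the key, which matches the case where no additional data is passed.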

A server receiving a CTDB_REQ_CALL must send one of the following replies:

1) a CTDB_REPLY_REDIRECT if it is not the DMASTER for the given record (as determined by the key)

2) a CTDB_REPLY_CALL if it is the DMASTER for the record, and wants to keep the DMASTER role for the time being.

3) a CTDB_REQ_DMASTER if it wishes to hand over the DMASTER role for the record to the requesting node.

The following errors can be generated:

    CTDB_ERR_VNN_MAP
    CTDB_ERR_TIMEOUT

The 3rd type of reply is special, as it is sent not to the requesting node, but to the LMASTER. It is a message that tells the LMASTER that this node no longer wants to be the DMASTER for a record, and asks the LMASTER to grant the DMASTER status to another node.

When the LMASTER receives a CTDB_REQ_DMASTER request from a node, it will in turn send a CTDB_REPLY_DMASTER reply to the original requesting node. Also see the section on "dmaster handover" below.
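The choice between the three responses, and where each one is sent, can be written down as a pair of small functions. This is a sketch under the assumption that the handover decision is just the LACOUNT threshold test from the heuristic described earlier. The enum and function names are invented for this example.

```c
#include <assert.h>
#include <stdint.h>

enum ctdb_response {
    SEND_REPLY_REDIRECT, /* not the DMASTER for this record */
    SEND_REPLY_CALL,     /* DMASTER, and keeping the role */
    SEND_REQ_DMASTER     /* DMASTER, but handing the role over */
};

/* Decide how to respond to a CTDB_REQ_CALL for one record. */
static enum ctdb_response choose_response(uint32_t my_vnn,
                                          uint32_t local_dmaster,
                                          uint32_t lacount,
                                          uint32_t threshold)
{
    if (local_dmaster != my_vnn) {
        return SEND_REPLY_REDIRECT;
    }
    if (lacount > threshold) {
        return SEND_REQ_DMASTER;
    }
    return SEND_REPLY_CALL;
}

/* The handover message goes to the LMASTER; the other two responses
 * go back to the node that sent the request. */
static uint32_t response_destination(enum ctdb_response r,
                                     uint32_t requester, uint32_t lmaster)
{
    return r == SEND_REQ_DMASTER ? lmaster : requester;
}
```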

CTDB_REPLY_CALL

    uint32 DATALEN
    uint8  DATA[]

A CTDB_REPLY_CALL contains any data returned from the remote function call of a CTDB_REQ_CALL request.

CTDB_REQ_DMASTER

    uint32 DMASTER  (VNN of requested new DMASTER)
    uint32 KEYLEN
    uint8  KEY[]
    uint32 DATALEN
    uint8  DATA[]

The CTDB_REQ_DMASTER request is unusual in that it is always sent to the LMASTER, and may be sent in response to a different request from another node. It asks the LMASTER to hand over the DMASTER status to another node, along with the current data for the record.

If LMASTER is equal to the requested new DMASTER then no further packets need to be sent, as the LMASTER has now become the DMASTER. If the requested DMASTER is not equal to the LMASTER then the LMASTER will send a CTDB_REPLY_DMASTER to the new requested DMASTER.

This message will have the same REQID as the incoming message that triggered it.

CTDB_REPLY_DMASTER

    uint32 DATALEN
    uint8  DATA[]

This message always comes from the LMASTER, and tells a node that it is now the DMASTER for a record.

This message will have the same REQID as the incoming CTDB_REQ_DMASTER that triggered it. The key is not needed as the node has that REQID and knows the key already.

CTDB_REPLY_REDIRECT

uint32 DMASTER

A CTDB_REPLY_REDIRECT is sent when a node receives a request for a record for which it is not the current DMASTER. The DMASTER field in the reply is a hint to the requester giving the next DMASTER it should try. If no reasonable DMASTER value is known then the LMASTER is specified in the reply.

CTDB_REPLY_ERROR

    uint32 STATUS
    uint32 MSGLEN
    uint8  MSG[]

All errors from CTDB_REQ_* messages are sent using a CTDB_REPLY_ERROR reply. The STATUS field indicates the type of error, from the CTDB_ERR_* range of errors. The MSG field is an optional UTF8 encoded string that can provide additional human readable information regarding the error.

The possible error codes are:

    CTDB_ERR_NOT_DMASTER
    CTDB_ERR_NOT_LMASTER
    CTDB_ERR_VNN_MAP
    CTDB_ERR_INTERNAL_ERROR
    CTDB_ERR_NO_MEMORY

Call Usage

The CTDB_REQ_CALL request replaces several other calls in version 0.2 of this document, but it may not be obvious at first glance how the functions in the 0.2 protocol map onto the CTDB_REQ_CALL approach.

A good example is a simple 'fetch' of a record. In version 0.2 of the protocol there was a separate CTDB_REQ_FETCH request, and associated CTDB_REPLY_FETCH reply. To get the same effect with a CTDB_REQ_CALL you would set up a remote function like this:

static int fetch_func(struct ctdb_call *call)
{
    call->reply_data = &call->record_data;
    return 0;
}

then register it like this:

ctdb_set_call(ctdb, fetch_func, FUNC_FETCH);

and make the call like this:

ctdb_call(ctdb, key, FUNC_FETCH, NULL, &data);

At the CTDB protocol level, this results in a CTDB_REQ_CALL request, with a CALLID of FUNC_FETCH (an integer) and a CALLDATALEN of zero, indicating that no additional data is passed in the call.

The call->reply_data = &call->record_data expression sets the reply data for the call to the current contents of the record. This results in a CTDB_REPLY_CALL reply containing the record's data.
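A call function that updates the record, such as the "conditional append" mentioned earlier, would set new_data instead. The sketch below is self-contained, so it declares local stand-ins for TDB_DATA and struct ctdb_call. The condition used (reject the append if an identical fixed-size entry is already present) is a made-up placeholder for the real conflict check, and plain malloc is used where Samba code would more likely use talloc.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>
#include <string.h>

/* Local stand-ins so that the sketch compiles on its own. */
typedef struct TDB_DATA {
    unsigned char *dptr;
    size_t dsize;
} TDB_DATA;

struct ctdb_call {
    TDB_DATA key;         /* record key */
    TDB_DATA record_data; /* current data in the record */
    TDB_DATA *new_data;   /* optionally updated record data */
    TDB_DATA *call_data;  /* optionally passed from caller */
    TDB_DATA *reply_data; /* optionally returned by function */
};

/* Hypothetical conditional append: the record is treated as an array
 * of entries the same size as call_data, and the new entry is appended
 * only if no identical entry exists. Returns 0 if the entry was
 * appended and -1 otherwise. On success new_data is one malloc'ed
 * block that the caller releases with free(). */
static int append_if_absent(struct ctdb_call *call)
{
    const TDB_DATA *rec = &call->record_data;
    const TDB_DATA *add = call->call_data;
    TDB_DATA *out;

    if (add == NULL || add->dsize == 0 || rec->dsize % add->dsize != 0) {
        return -1;
    }
    for (size_t off = 0; off < rec->dsize; off += add->dsize) {
        if (memcmp(rec->dptr + off, add->dptr, add->dsize) == 0) {
            return -1; /* condition failed, record left unchanged */
        }
    }
    out = malloc(sizeof(*out) + rec->dsize + add->dsize);
    if (out == NULL) {
        return -1;
    }
    out->dptr = (unsigned char *)(out + 1); /* data follows the struct */
    out->dsize = rec->dsize + add->dsize;
    if (rec->dsize > 0) {
        memcpy(out->dptr, rec->dptr, rec->dsize);
    }
    memcpy(out->dptr + rec->dsize, add->dptr, add->dsize);
    call->new_data = out;
    return 0;
}
```

The point of the example is that the test and the update happen in one function on the node that holds the record, so no remote lock is needed around them.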

CTDB Recovery

Database recovery when one or more nodes go down is crucial to the robustness of the cluster. In CTDB, recovery is triggered when one of the following things happens:

1) a reply to a CTDB message is not received after a reasonable time.

2) an admin manually requests that one or more nodes be removed from the cluster, or added to the cluster

3) a CTDB_ERR_VNN_MAP error is received, indicating that either the sending or receiving node has the wrong VNN map

A node that detects one of these conditions starts the recovery process. It immediately stops processing normal CTDB messages and sends a message to all nodes starting a global recovery. I have not yet worked out the precise nature of these messages (that should appear in a later version of this document), but some basics are clear:

1) the recovery process needs to assign every node a new VNN, and will choose VNNs that are different from all the VNNs currently in use. This is important to ensure that none of the old VNNs remain valid, so we can detect when a 'zombie' node that is non-responsive during recovery starts sending messages again. When such a node wakes up it will trigger a CTDB_ERR_VNN_MAP message as soon as it tries to send a CTDB message.

2) There will be one 'master' node controlling the recovery process. We need to decide how this node is chosen, probably using the lowest numbered physical node that is currently operational.

3) at the end of recovery there needs to be a global ACK that the recovery has concluded before normal CTDB messages start again.

4) nodes will need to look through their data structures of open resources to recover the pieces of the data for each record. These will then be pieced together with a mechanism very similar to a CTDB_REQ_CONDITIONAL_APPEND.

5) there will need to be a callback function to allow the database to get at the data from (4).
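Points (1) and (2) above are simple enough to sketch even though the recovery messages themselves are not yet defined. The code below hands out fresh VNNs that are all higher than any VNN in the old map, and picks the lowest numbered live physical node as recovery master. Array indexes stand for physical node numbers. All of the names, the VNN_NONE marker and the "highest old VNN plus one" policy are assumptions for illustration only.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define VNN_NONE UINT32_MAX /* marks a node that has no VNN */

/* Give every live node a new VNN that differs from every VNN in the
 * old map, by starting above the highest old value. Dead nodes get
 * VNN_NONE. Returns the number of surviving nodes. Wrap-around of the
 * VNN space is not handled in this sketch. */
static size_t reassign_vnns(const uint32_t *old_vnn, const int *alive,
                            uint32_t *new_vnn, size_t n)
{
    uint32_t next = 0;
    size_t survivors = 0;

    for (size_t i = 0; i < n; i++) {
        if (old_vnn[i] != VNN_NONE && old_vnn[i] >= next) {
            next = old_vnn[i] + 1;
        }
    }
    for (size_t i = 0; i < n; i++) {
        if (alive[i]) {
            new_vnn[i] = next++;
            survivors++;
        } else {
            new_vnn[i] = VNN_NONE;
        }
    }
    return survivors;
}

/* Lowest numbered physical node that is currently operational,
 * or -1 if no node is alive. */
static int recovery_master(const int *alive, size_t n)
{
    for (size_t i = 0; i < n; i++) {
        if (alive[i]) {
            return (int)i;
        }
    }
    return -1;
}
```

Because no old VNN survives the reassignment, a 'zombie' node that still addresses messages with the old numbers would fail the DESTNODE/SRCNODE check on the first message it sends.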

In version 0.1 of this document it was envisioned that the recovery would be based solely on the RSN, rolling back each record to the record with the highest RSN for that record across the cluster. While I think that mechanism would still work, it is harder to prove it is correct in all cases than a mechanism based on nodes re-supplying their own pieces of the data for each record.

TODO

Lots more to work out ...

- Recovery protocol details

- What if CTDB_REQ_DMASTER message is lost?

- explain how deleted records are handled in recovery (hint: the client supplied data recovery ends up with an empty record)

- Client based recovery - highest seqnum, plus any actively locked records versus structure driven recovery.

- Need to work out the consequences of message loss for each of the message types. Especially replies.

- What events system to use?

- explain recovery model, with deliberate rollback of records

- what transport API to use? Standardise on MPI? Use a lower level API? Use a transport abstraction? What is the latency cost of an abstraction?

- how to integrate event handling with the transport? Pull Samba4 events system into tdb?

Credits

This work is the result of many long discussions and experiments by Volker Lendecke, Sven Oehme, Alexander Bokovoy, Aleksey Fedoseev and Andrew Tridgell